Same here! I’m happy to see the UBports fork is still active as Lomiri, I haven’t checked it out in a while.

nek0d3r

- 0 Posts

- 52 Comments

2·4 days ago

I can’t wait until someone cracks it and I can just use it as my go-to source for Nintendo music storage.

…(in Minecraft)

KDE, because despite my bitterness for the loss of Unity 8, I know it’s merely nostalgia for me. I want something I feel like I can make my own without too much difficulty.

I’m a little of both. I joined to escape the Reddit blackout shutdown, but I stayed for the advantages of the fediverse. I grew up working with a lot of proprietary software, and I’ve had growing pains as I’ve become bitter about it over time. I’ve been self-hosting, working on migrating my machines to Linux, and trying to find workable alternatives to everything.

Edit: yes I’m quite techy, a DevSecOps/software engineer. I worked with Linux a long time through VMs and containers, but gaming and Adobe kept me from having a daily driver machine for more than a little while. I don’t think I’ll ever fully escape Windows because I’m a big .NET developer and work with a lot of legacy code, but I’m more than happy to leave that to a QEMU VM.

5·25 days ago

I have a long-term project to migrate my machines, and the introduction of Recall pressured me to move faster, but I still have some hurdles to overcome that just require a time sink on my part.

Alright this has me giggling this morning

151·30 days ago

I completely understand where this is coming from, but I’m just a little confused about what the solution would be. For the average consumer and certainly the target users for Windows, shipping with a browser is the expected norm, and none are expected to open a terminal, much less run tools like winget. I guess you could have a setup dialog of major browsers to choose from?

Now that Citra isn’t available, Nintendo knows I have no choice but to buy Samus Returns on my Switch!

This has been a dream of mine and one of my friends’ as well. There’s a small handful of blockers that I’ve slowly been transitioning away from, but the upcoming Windows pain points you mentioned are definitely recent motivators for me. I’m glad you made it and I hope the rest of us can too! I look forward to reading more about your experience.

In general I’d agree, although Citra feels like an exception. I’m not quite sure why they targeted that one so hard.

4·1 month ago

I’ve had almost all my posts on Reddit go up in smoke for one pedantic reason or another. I haven’t posted here much out of that fear but I think it’s much better here.

1·2 months ago

Do you have a source for Search Generative Experience using a separate model? As far as I’m aware, all of Google’s AI services are powered by the Gemini LLM.

2·2 months ago

I feel you man lmao

6·2 months ago

The last I had heard of this was articles months in saying it was still not fixed, but this doesn’t invalidate my point. It may have been retrained to respond otherwise, but it still spouts garbled inputs.

312·2 months ago

Generative AI does not work like this. These models are not like humans at all; they will regurgitate whatever input they receive, like how Google can’t stop Gemini from telling people to put glue in their pizza. If it really worked like that, there wouldn’t be these broad and extensive policies within tech companies about using it with company-sensitive data, like protection compliances. The day that a health insurance company manager says, “sure, you can feed ChatGPT medical data” is the day I trust genAI.

What can I say, he’s smarter than the a-ver-age медведь!

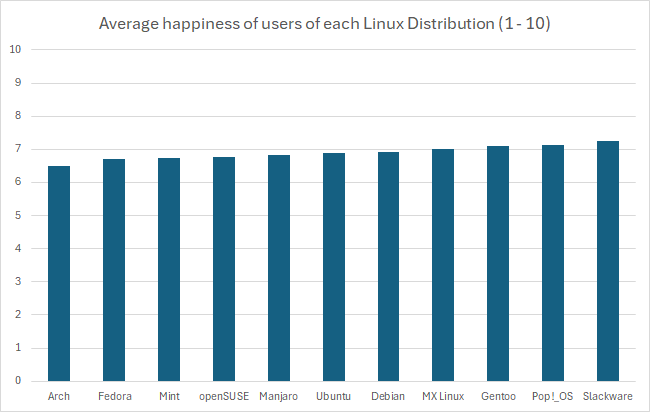

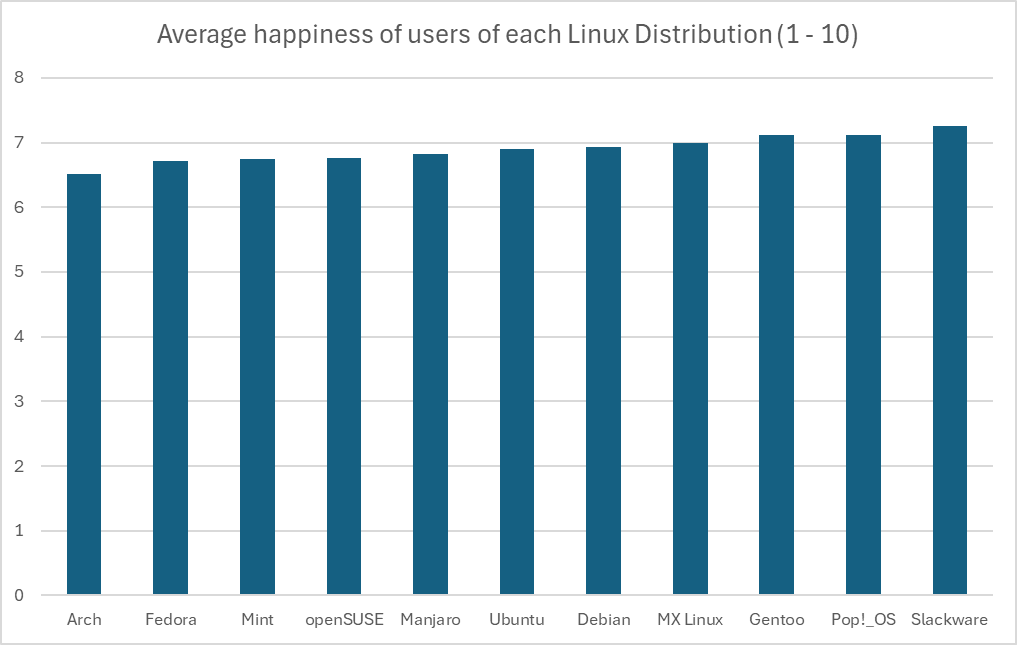

That’s basically it. Some Arch users are genuinely just picky about what they want on their system and desire to make their setup as minimal as possible. However, a lot of people who make it their personality just get a superiority complex over having something that’s less accessible to the average user.

4·3 months ago

There’s not a lot of data to work with, and the kind of test used to determine significance is not the same across the board, but in this case you can do an analysis of variance. Start with a null hypothesis that the differences in happiness level between distros are insignificant, and an alternative hypothesis that they’re not. Here are the assumptions we have to make:

- An alpha value of 0.05. This is somewhat arbitrary, but 5% is the go-to threshold for statistical significance.

- A reasonable sample size of users tested for happiness, we’ll go with 100 for each distro.

- A standard deviation between users in distro groups. This is really hard to know without seeing more data, but as long as the sample size was large enough and in a normal distribution, we can reasonably assume s = 0.5 for this.

We can start with the total mean, this is pretty simple:

(6.51 + 6.71 + 6.74 + 6.76 + 6.83 + 6.9 + 6.93 + 7 + 7.11 + 7.12 + 7.26) / 11 = 6.897

Now we need the total sum of squares, the squared differences between each individual value and the overall mean:

- Arch: (6.51 - 6.897)^2 = 0.150

- Fedora: (6.71 - 6.897)^2 = 0.035

- Mint: (6.74 - 6.897)^2 = 0.025

- openSUSE: (6.76 - 6.897)^2 = 0.019

- Manjaro: (6.83 - 6.897)^2 = 0.005

- Ubuntu: (6.9 - 6.897)^2 = 0.00001

- Debian: (6.93 - 6.897)^2 = 0.001

- MX Linux: (7 - 6.897)^2 = 0.011

- Gentoo: (7.11 - 6.897)^2 = 0.045

- Pop!_OS: (7.12 - 6.897)^2 = 0.050

- Slackware: (7.26 - 6.897)^2 = 0.132

This makes a total sum of squares of 0.471. With our sample size of 100, this makes for a sum of squares between groups of 47.1. The degrees of freedom for between groups is one less than the number of groups (df1 = 10).

The sum of squares within groups is where it gets tricky, but using our assumptions, it would be:

number of groups * (sample size - 1) * (standard deviation)^2

Which calculates as:

11 * (100 - 1) * (0.5)^2 = 272.25

The degrees of freedom for this would be the number of groups subtracted from the sum of sample sizes for every group (df2 = 1089).

Now we can calculate the mean squares, which is generally the quotient of the sum of squares and the degrees of freedom:

- MS (between): 47.1 / 10 = 4.71

- MS (within): 272.25 / 1089 = 0.25 (doesn’t end up making a difference, but just for clarity)

Now the F-statistic value is determined as the quotient between these:

F = 4.71 / 0.25 = 18.84

To not bog this down even further, we can use an F-distribution table with the following calculated values:

- df1 = 10

- df2 = 1089

- F = 18.84

- alpha = 0.05

According to the linked table, the F-critical value is between 1.9105 and 1.8307. The calculated F-statistic value is higher than the critical value, which is our indication to reject the null hypothesis and conclude that there is a statistically significant difference between these values.
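For anyone who wants to check the arithmetic, here is a minimal Python sketch of the same calculation. It uses only the group means quoted above plus the same made-up assumptions (100 users per distro, s = 0.5); the function name and constants are just illustrative, and the critical value is the upper table bound mentioned above rather than something computed. Because it doesn’t round intermediate values, F comes out at roughly 18.85 instead of 18.84.

```python
from statistics import fmean

# Mean happiness per distro, as quoted in the comment.
means = {
    "Arch": 6.51, "Fedora": 6.71, "Mint": 6.74, "openSUSE": 6.76,
    "Manjaro": 6.83, "Ubuntu": 6.9, "Debian": 6.93, "MX Linux": 7.0,
    "Gentoo": 7.11, "Pop!_OS": 7.12, "Slackware": 7.26,
}

# These are the comment's assumptions, not measured values.
N_PER_GROUP = 100      # assumed sample size per distro
ASSUMED_SD = 0.5       # assumed standard deviation within each distro
F_CRIT_UPPER = 1.9105  # upper table bound for df1 = 10, alpha = 0.05


def anova_from_means(group_means, n, sd):
    """One-way ANOVA F-statistic from group means, assuming equal n and sd."""
    k = len(group_means)
    grand_mean = fmean(group_means)
    # Between groups: n times the squared distance of each mean from the grand mean.
    ss_between = n * sum((m - grand_mean) ** 2 for m in group_means)
    # Within groups: every group contributes (n - 1) * sd^2 under the assumption.
    ss_within = k * (n - 1) * sd ** 2
    df1 = k - 1
    df2 = k * n - k
    f_stat = (ss_between / df1) / (ss_within / df2)
    return grand_mean, df1, df2, f_stat


grand_mean, df1, df2, f_stat = anova_from_means(
    list(means.values()), N_PER_GROUP, ASSUMED_SD
)
print(f"grand mean = {grand_mean:.3f}, df1 = {df1}, df2 = {df2}, F = {f_stat:.2f}")
print("reject null hypothesis:", f_stat > F_CRIT_UPPER)
```

Changing `ASSUMED_SD` is a quick way to see how much the conclusion leans on that guess: the larger the assumed spread within each distro, the smaller F gets.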

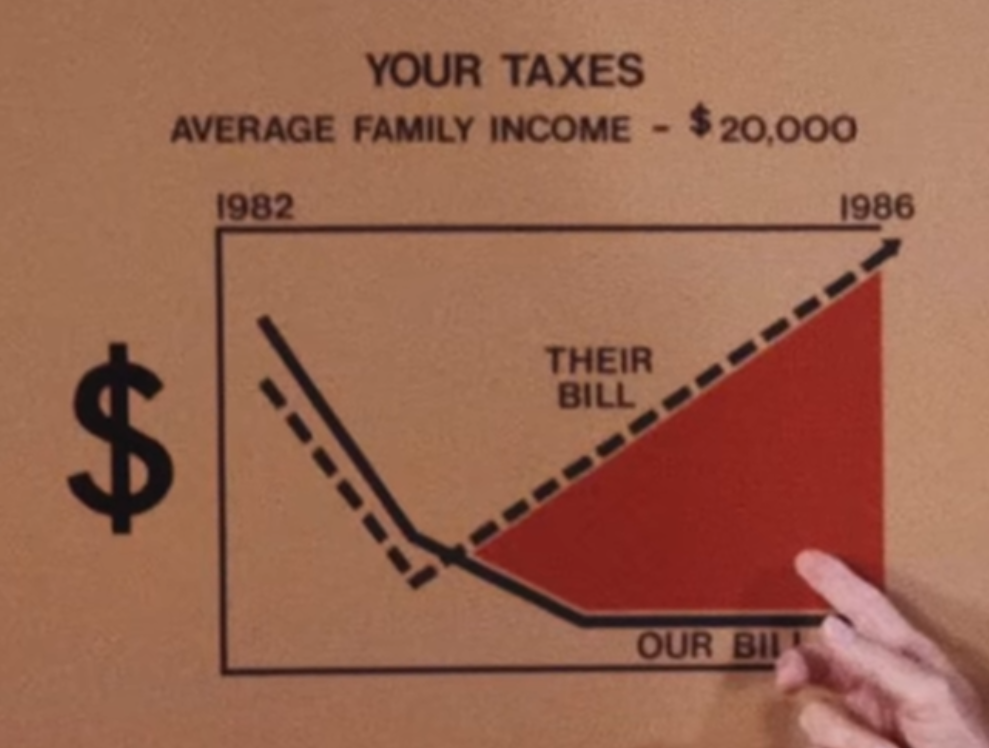

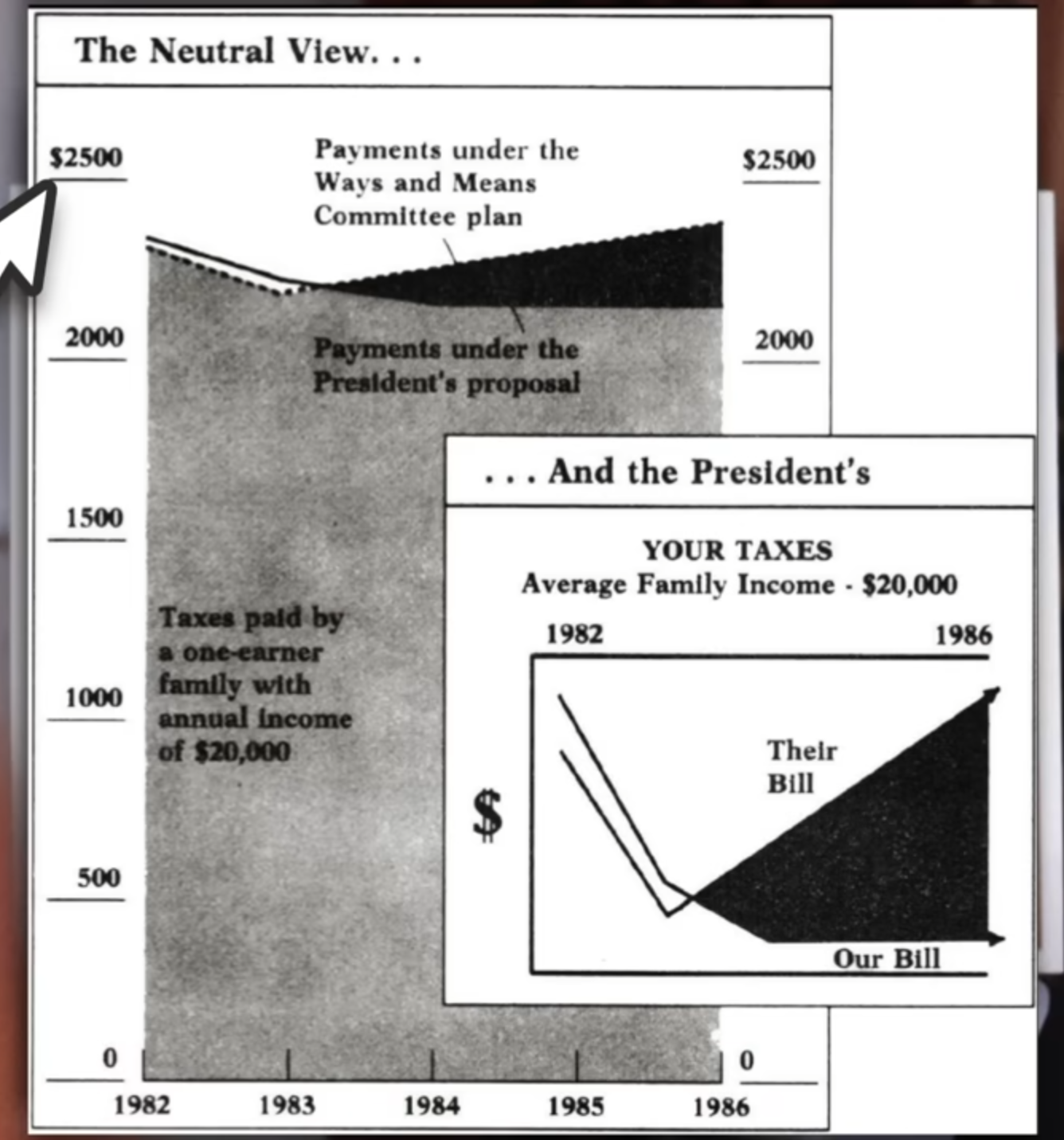

However, again you can see above just how many assumptions we had to make: that the data within each group was large in number and normally distributed. There’s just not enough data to really be sure of any of what I just did above, so the only thing we have to rely on is the representation of the data we do have. Regardless of the intentions of whoever created this graph, the graph itself does in fact misrepresent the data by excluding the commonality between groups to affect our perception of scale. There’s a clip I made of a great example of this:

There’s a pile of reasons this graph is terrible, awful, no good. However, it’s that scale of the y-axis I want to focus on.

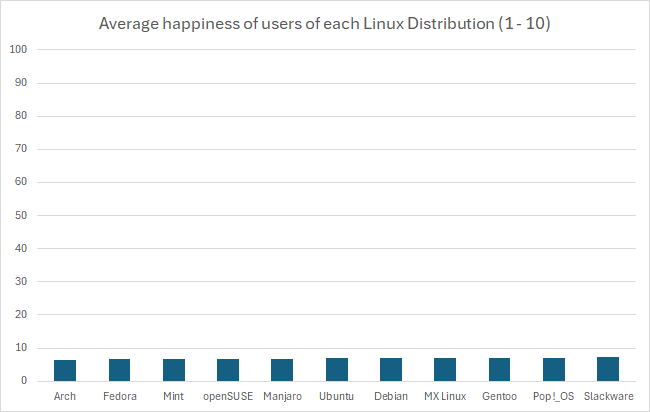

This is an egregious example of this kind of statistical manipulation for the point of demonstration. In another comment I ended up recreating this bar graph with a more proper scale, which has a lower bound of 0 as it should. It’s suggested that these are values out of 10, so that should be the upper bound as well. That results in something that looks like this:

In fact, if you wanted you could go the other way and manipulate data in favor of making something look more insignificant by choosing a ridiculously high upper bound, like this:

But using the proper scale, it’s still quite difficult to tell. If these numbers were something like average reviews of products, it would be easy in that perspective to imagine these as insignificant, like people are mostly just rating 7/10 across the board. However, it’s the fact that these are Linux users that makes you imagine that the threshold for the differences is much lower, because there just aren’t that many Linux users, and opinions vary wildly between them.

This also calls into question how that data was collected, which would require knowing how the question was asked, and how users were polled or tested to eliminate the possibility of confounding variables. At the end of the day I just really could not tell visually if it’s significant or not, but that graph is not a helpful way to represent it. In fact, I think Excel might be to blame for this kind of mistake happening more commonly; when I created the graph, it defaulted the lower bound to 6. I hope this was helpful, it took me way too much time to write 😂
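To put a rough number on the scale problem, here is a small Python sketch comparing how tall the highest bar (Slackware, 7.26) looks next to the lowest (Arch, 6.51) depending on where the y-axis starts. The lower bound of 6 is the Excel default mentioned above, used here as an assumption about the original graph, and the helper name is purely illustrative.

```python
# Lowest and highest group means quoted in the comment.
ARCH, SLACKWARE = 6.51, 7.26


def bar_height_ratio(low, high, axis_min):
    """Ratio of drawn bar heights when the y-axis starts at axis_min."""
    return (high - axis_min) / (low - axis_min)


# Axis starting at 6 (assumed, based on the Excel default) vs. starting at 0.
truncated = bar_height_ratio(ARCH, SLACKWARE, axis_min=6.0)
full_scale = bar_height_ratio(ARCH, SLACKWARE, axis_min=0.0)

print(f"axis from 6: Slackware's bar is {truncated:.2f}x as tall as Arch's")
print(f"axis from 0: Slackware's bar is {full_scale:.2f}x as tall as Arch's")
```

With the axis cut off at 6, the tallest bar is drawn about two and a half times the height of the shortest, even though the underlying values differ by only around 12%.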

3·3 months ago

Just to kind of demonstrate that idea, I’ve recreated the graph in Excel with the axis starting at 0. I think Excel might actually be to blame for this happening so much, its auto selection actually wanted to pick 6, gross.

When I read “fully autonomous”, I see how creepy its movements are and just imagine it seizing its moment, getting on all fours and charging someone. You could make a horror movie out of this lol