3·1 year ago

Like the SEAL who became a doctor and then went to space. Don’t feel like you have to limit yourself, you know?

I watched it in Vim, mostly because I don’t hate myself enough.

Not to spoil the ending either, but the nuclear device goes off because Oppenheimer smashes a bunch of keys and then finally remembers `:wq`.

51·1 year ago

Tech doesn’t really self-select for well-balanced, socially confident, neurologically normal folks.

I’m sure those people are in tech and have success as well, but the stereotype of the “hacker nerd” didn’t spring out of nothing. The obsessiveness and desire to be right and know everything that make IT geniuses can also make those same folks really, really hard to be around.

People who are ostracized for their socially aberrant behavior usually (not always!) have sympathy for other outcast groups, whatever the reason those groups were pushed out.

And you’re right, too - writing code is sort of one of the ultimate bullshit tests: either it works, or it doesn’t. Computers don’t care about your pedigree or your appearance or even your personality. Nice guys who write shit code might have a future in management or on the product team, but they don’t usually write code for very long. But good devs are hard to find, so even the most straitlaced companies are willing to bend a bit when it comes to talented developers.

My $.02, and worth every penny 😂

2·1 year ago

Just because it’s ‘the hot new thing’ doesn’t mean it’s a fad or a bubble. It doesn’t not mean it’s those things, but…the internet was once the ‘hot new thing’ and it was both a bubble (completely overhyped at the time) and a real, tidal wave change to the way that people lived, worked, and played.

There are already several other outstanding comments, and I’m far from a prolific user of AI like some folks, but - it allows you to tap into some of the more impressive capabilities that computers have without knowing a programming language. The programming language is English, and if you can speak it or write it, AI can understand it and act on it. There are lots of edge cases, as others have mentioned below, where AI comes up with answers (thanks to both the range and the depth of its training data) that seem to break new ground. They don’t, of course - it’s putting together data points and synthesizing an output - but even if mechanically it’s 2 + 3 = 5, it’s really damned impressive if you don’t have the depth of training to know what 2 and 3 are.

Having said that, yes, there are some problematic components to AI (from my perspective, the source and composition of all that training data is the biggest one), and there are obviously use cases that are, if not problematic in and of themselves, at the very least troubling. Using AI to generate child pornography would be one of the more obvious cases - it’s already illegal in many jurisdictions even when no real child is depicted, but even where the law is murky and no one is directly harmed, is it ethical? And there are the more societal concerns as well - there are human beings in a capitalist system who have trained their whole lives to be artists and writers, and those skills are already, for the most part, tragically undervalued. Do we really want to incentivize their total extermination? Are we, as human beings, okay with outsourcing artistic creation to this mechanical turk (the concept, not the Amazon service), and whether we are or we aren’t, what does it say about us as a species that we’re considering it?

The biggest practical reason not to get too swept up in AI is that it’s limited in weird and not totally understood ways. It ‘hallucinates’ data. Even when it doesn’t make something up, the first time that you run up against the edges of its capabilities, or it suggests code that doesn’t compile or an answer that is flat-out, provably wrong, or it says something crazy or incoherent or generates art that features humans with the wrong number of fingers or body horror or whatever…well, then you realize that you should sort of treat AI like a brilliant but troubled and maybe drug-addicted coworker. Man, there are some things that it is just spookily good at. But it needs a lot of oversight, because it can cross over from spookily good to what the fuck pretty quickly and completely without warning. ‘Modern’ AI is only different from previous AI systems (I remember chatting with ELIZA in the primordial moments of the internet) because it’s much, much better at maintaining the illusion of knowing things.

Baseless speculation: I think the first major legislation on AI models is going to require an understanding of the training data and of ‘not safe’ uses - much like ingredient labels were a response to unethical food products, or the way the government stepped in, as cars grew in size, power, and complexity, to regulate how, where, and why cars could be used, protecting users from themselves and everyone else from the users. I also think there’s going to be a major paradigm shift around training data at some point. There are already rumblings, but the idea that data (including this post!) that was intended for consumption by other human beings at no charge could be consumed into an AI product and then commercialized on a grand scale, possibly even to the detriment of the person who created it, is troubling.

2·1 year ago

This was my first thought.

I do this for a living and it’s literally built into Linux.

Set their permissions carefully, ensure that the permission set does what you want (and not a bunch of stuff you don’t want), and keep on keeping on.
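Assuming ‘permissions’ here means ordinary Unix file mode bits (the comment doesn’t say exactly what is being locked down), here’s a minimal TypeScript sketch of checking a permission set: it renders a mode number like `0o750` as the `rwxr-x---` string that `ls -l` prints, so you can see at a glance what the owner, the group, and everyone else can do. `describeMode` is a hypothetical helper name, and it only looks at the nine basic permission bits (no setuid, setgid, or sticky bit).

```typescript
// Hypothetical helper: render the nine basic Unix permission bits of a mode
// (e.g. 0o750) as an ls-style string such as "rwxr-x---".
// Special bits (setuid, setgid, sticky) are deliberately ignored.
function describeMode(mode: number): string {
  let out = "";
  // Walk from owner-read (bit 8) down to other-execute (bit 0).
  for (let bit = 8; bit >= 0; bit--) {
    // Positions cycle r, w, x for owner, then group, then others.
    out += mode & (1 << bit) ? "rwx".charAt((8 - bit) % 3) : "-";
  }
  return out;
}

console.log(describeMode(0o750)); // rwxr-x---
console.log(describeMode(0o644)); // rw-r--r--
```

In Node, `fs.statSync(path).mode` gives you a real mode value to feed it (mask it with `0o777` if you only care about these bits).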

I think sometimes the difference between strongly typed and loosely typed is easier to explain in terms of workflow. It’s possible (note: not necessarily a good idea, but possible) to start writing a function in vanilla JavaScript without any real idea of what it’s going to output. Is it going to return a string? Is it going to return nothing (which JavaScript hands back as `undefined`), because it’s performing some action on a variable? Or is it going to return that variable? And vanilla JS is fine with that.
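A rough TypeScript illustration of that workflow point, using a hypothetical `tidyText` helper: the function is written loosely (it always mutates its argument but only sometimes returns something), and TypeScript infers its return type as `string | undefined`, so callers have to deal with the ‘returned nothing’ case up front instead of discovering it at runtime.

```typescript
// Hypothetical helper written in a "figure it out as I go" style:
// it always mutates its argument, but only sometimes returns a value.
function tidyText(state: { text: string }, returnIt: boolean) {
  state.text = state.text.trim();
  if (returnIt) {
    return state.text;
  }
  return undefined; // the "performing some action on a variable" path
}

const doc = { text: "  hello  " };
const returned = tidyText(doc, true); // inferred type: string | undefined
const nothing = tidyText(doc, false); // undefined at runtime

// Because the inferred type includes undefined, TypeScript (with
// strictNullChecks) makes the caller check before using string methods.
if (returned !== undefined) {
  console.log(returned.toUpperCase()); // HELLO
}
console.log(nothing); // undefined
```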

But that same looseness that can be kind of useful when you’re brainstorming or problem solving can result in unexpected behavior. For instance, say I write a function that prepares a string for additional processing. For the purposes of this conversation, let’s say that it removes whitespace and any character that isn’t a letter or a number, and then returns the transformed string.
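Here’s a minimal sketch of that function in TypeScript, assuming ‘letter or number’ means ASCII `A-Z`, `a-z`, and `0-9` (the name `prepareString` is made up for the example). A single negated character class handles both rules, since whitespace is itself ‘not a letter or number’.

```typescript
// Hypothetical name. Removes whitespace and every character that is not an
// ASCII letter or digit, then returns what is left.
function prepareString(input: string): string {
  // [^A-Za-z0-9] matches anything outside the allowed set, spaces included.
  return input.replace(/[^A-Za-z0-9]/g, "");
}

console.log(prepareString("  Hello, World! 42 ")); // HelloWorld42
```

If you need non-ASCII letters too, a Unicode property class such as `/[^\p{L}\p{N}]/gu` is one option, at the cost of needing an ES2018 or later compile target.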

What happens if I feed a number (integer) to this function instead of a string? What about a number with a decimal (float)? Does my function (and/or JavaScript) treat them as literal strings? Does it remove the decimal from the float, since it’s a non-letter, non-number value?
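The answer depends on how the function is written, so here’s a TypeScript sketch that opts out of type checking with `any` to mimic vanilla JavaScript (both function names are hypothetical). If the function calls `.replace()` on its input directly, a number blows up at runtime, because numbers don’t have a `.replace()` method. If it coerces the input with `String()` first, the float quietly loses its decimal point, since `.` is a non-letter, non-number character.

```typescript
// Typed as `any`, so TypeScript stops checking: roughly vanilla JS behavior.
function prepareLoose(input: any): any {
  return input.replace(/[^A-Za-z0-9]/g, "");
}

try {
  prepareLoose(3.14);
} catch (err) {
  // Numbers have no .replace method, so this throws a TypeError at runtime,
  // with a message along the lines of "input.replace is not a function".
  console.log((err as Error).message);
}

// A variant that coerces to a string first never throws for numbers,
// but it silently turns 3.14 into "314".
function prepareCoerced(input: any): string {
  return String(input).replace(/[^A-Za-z0-9]/g, "");
}

console.log(prepareCoerced(3.14)); // 314
console.log(prepareCoerced(42)); // 42
```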

Without TypeScript, you have to either a) write your code in such a way that the situation never comes up (i.e. by validating inputs, or by being very careful about when and how this function is used), or b) handle those weird cases, which turns a cute little three-line function into a 30-line monster with a switch statement and type casting. And truthfully, on any project ‘at scale’ you’re going to have to do both.
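Option b) might look something like this sketch, which accepts `unknown` input and uses a `switch` on `typeof` to decide what each case means. The specific policy (digits kept for numbers, arrays joined, booleans rejected) and the name `prepareDefensive` are assumptions made up for illustration; the point is how much code the ‘handle everything’ approach piles on top of a one-line body.

```typescript
// Hypothetical defensive version for untyped callers: inspect the runtime
// type and decide what each case should mean before doing the real work.
function prepareDefensive(input: unknown): string {
  let text: string;
  switch (typeof input) {
    case "string":
      text = input; // narrowed to string by the typeof switch
      break;
    case "number":
      // Assumed policy: keep the digits, let the cleanup drop the decimal point.
      text = String(input);
      break;
    case "boolean":
      throw new TypeError("prepareDefensive: booleans are not supported");
    default:
      if (Array.isArray(input)) {
        // Assumed policy: stringify each element and glue them together.
        text = input.map(String).join("");
      } else {
        throw new TypeError(`prepareDefensive: unsupported input ${String(input)}`);
      }
  }
  // The actual "three-line function" part.
  return text.replace(/[^A-Za-z0-9]/g, "");
}

console.log(prepareDefensive(["a", "b c"])); // abc
```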

With TypeScript, you specify that this function takes in a string and returns a string, and that’s it. Then, when another dev (or you, in six weeks when you’ve forgotten all about this function that you wrote) tries to send in a boolean, or a float, or an array of strings, TypeScript is going to blow up at compile time, before the code ever runs. Nope. Can’t do that. And they (it’s more fun to think of it as future you) will have to follow the rules that you put in place by writing the function the way you did, which just means being careful enough to convert a float or integer into a string before passing it, or joining an array into a single string, or whatever.
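A minimal sketch of that contract in TypeScript (the name `prepareTyped` is hypothetical). The calls that would fail to compile are left commented out so the snippet still runs; uncommented, `tsc` reports error TS2345 (argument type not assignable to parameter type) for each one.

```typescript
// String in, string out: that's the whole contract.
function prepareTyped(input: string): string {
  return input.replace(/[^A-Za-z0-9]/g, "");
}

// Rejected by the compiler (TS2345), so left commented out:
// prepareTyped(3.14);
// prepareTyped(true);
// prepareTyped(["a", "b"]);

// Future you has to convert explicitly at the call site instead.
const fromFloat = prepareTyped(String(3.14)); // "314"
const fromArray = prepareTyped(["a", "b c"].join("")); // "abc"
console.log(fromFloat, fromArray);
```

The design choice here is that the conversion policy lives with the caller, who knows what a float or an array is supposed to mean in their context, rather than inside the function.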

Strongly typed languages expect you to write functions with the end state you’re expecting in mind. You’ve already got the solution in your head before you start typing, at least enough to know what type of data goes in and what type of data goes out.
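One way to see the ‘solution in your head first’ idea in TypeScript is to write the contract down as a type before writing any body. This is a sketch; `Sanitizer` and both implementations are hypothetical names. Each implementation is checked against the declared type, so a body that returned the wrong thing would be flagged at its definition, not just at the call sites.

```typescript
// The contract exists before any implementation does.
type Sanitizer = (input: string) => string;

// The parameter type is inferred from the contract. Returning anything other
// than a string would be a compile error (TS2322), e.g. this rejected line:
// const broken: Sanitizer = (input) => input.length;
const stripNonAlphanumeric: Sanitizer = (input) =>
  input.replace(/[^A-Za-z0-9]/g, "");

// A second implementation of the same contract can build on the first.
const stripAndLowercase: Sanitizer = (input) =>
  stripNonAlphanumeric(input).toLowerCase();

console.log(stripAndLowercase("Hello, World!")); // helloworld
```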

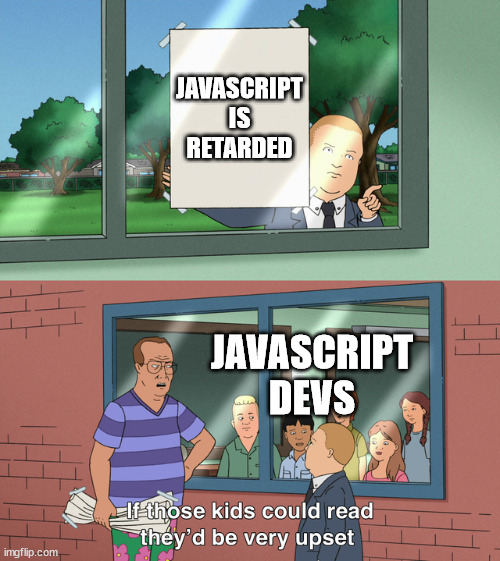

It also hides (but doesn’t totally get rid of!) some of the sillier things that JavaScript does because it’s not strongly typed. `true + true` should probably never equal 2, but in JavaScript it does.
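A quick sketch of both halves of that claim, assuming a standard `tsc` setup: TypeScript refuses to add two booleans (error TS2365, ‘Operator '+' cannot be applied to types 'boolean' and 'boolean'’), but it still lets a string and a number meet with `+`, which concatenates rather than adds.

```typescript
// Plain JavaScript evaluates this to 2; TypeScript rejects it (TS2365),
// so it stays commented out:
// const oops = true + true;

// If you really want booleans as numbers, TypeScript makes you say so.
const checks: boolean[] = [true, true, false];
const passed = checks.reduce((total, ok) => total + Number(ok), 0);
console.log(passed); // 2

// What it doesn't get rid of: string + number is still legal,
// and still concatenates instead of adding.
const concatenated = "5" + 2;
console.log(concatenated); // 52
```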

As to OP’s question, though - if you know how to do things in JavaScript (`.trim()`, for example), then you know how to do them in TypeScript. Manipulating data in JavaScript and manipulating data in TypeScript is identical - but TypeScript is also keeping track of the types of the data being used, and throwing up the BS flag when you get too loose with what you’re doing (or don’t think through the consequences of your design decisions).
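To illustrate, here’s a small sketch (`normalizeLabel` is a made-up name): the body is nothing but ordinary JavaScript string methods, and the only TypeScript-specific parts are the `: string` annotations, which are erased when the code is compiled.

```typescript
// Strip the ": string" annotations and this is valid JavaScript.
function normalizeLabel(raw: string): string {
  return raw.trim().toLowerCase();
}

console.log(normalizeLabel("  Ada Lovelace  ")); // ada lovelace

// The "BS flag": this is rejected before the code ever runs (TS2345).
// In plain JavaScript it would only fail at runtime with a TypeError,
// because numbers have no .trim() method.
// normalizeLabel(42);
```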