
Plenty. If you scroll down, there are dozens of research articles linked. For most of them, you just have to click on the circles :-)

Here’s an excerpt from the bottom of the article:

The most conclusive long-term study on sleep training to date is a 2012 randomized controlled trial on 326 infants, which found no difference on any measure—negative or positive—between children who were sleep trained and those who weren’t after a 5 year follow up. The study includes measurements of sleep patterns, behavior, cortisol levels, and, importantly, attachment.

11·3 months ago

That’s an interesting point. But maybe there are some compounds that can induce a state that fools people who’ve never tried psychoactive compounds? I’ve heard of studies using heavy water (deuterium oxide) as a placebo for alcohol, as it induces some of the same effects:

Like ethanol, heavy water temporarily changes the relative density of cupula relative to the endolymph in the vestibular organ, causing positional nystagmus, illusions of bodily rotations, dizziness, and nausea. However, the direction of nystagmus is in the opposite direction of ethanol, since it is denser than water, not lighter.

2·3 months ago

To a certain extent I agree, but I also think it’s a tricky topic that deals a fair bit with the ethics of medicine. The Atlantic has a pretty good article with arguments for and against: https://web.archive.org/web/20230201192052/https://www.theatlantic.com/health/archive/2011/12/the-placebo-debate-is-it-unethical-to-prescribe-them-to-patients/250161/

Yes, in your three situations, I’d agree that option C is the best one. But you’re disregarding a major component of any drug: side effects. Presumably ecstasy has some non-negligible side effects, so just looking at the improvement in the treated disease might not show the full picture.

3·3 months ago

I agree that it’s a shame that it’s so difficult to eliminate the placebo effect from psychoactive drugs. There are probably alternative ways of teasing out the effect, if any, of MDMA therapy, but human studies take a long time and, consequently, cost a lot of money. I’d imagine the researchers would love to do the studies but don’t have the resources for it.

I think the critique about conflicts of interest seems a bit misguided. It’s not the scientists who don’t want to move forward with this. It’s the FDA.

12·3 months ago

But if they know they’re getting ecstasy, the improvement might originate from placebo, which means that they’re not actually getting better from ecstasy. They’re just getting better because they think they should be getting better.
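A toy simulation of that worry might look like this. The effect sizes (`EXPECTATION_EFFECT`, `DRUG_EFFECT`, `NOISE_SD`) are made up for illustration, not taken from any MDMA study. When everyone knows they got the drug, the expectation effect shows up as "improvement" even though the drug itself does nothing here. With successful blinding, the expectation effect hits both arms equally and cancels out in the drug-minus-placebo difference.

```python
import random
import statistics

# Hypothetical effect sizes, chosen only for illustration (not from any study).
EXPECTATION_EFFECT = 5.0  # improvement from believing you may have received the drug
DRUG_EFFECT = 0.0         # true pharmacological effect; zero in this toy scenario
NOISE_SD = 3.0            # patient-to-patient variation


def improvement(got_drug: bool, expects_benefit: bool, rng: random.Random) -> float:
    """Symptom improvement for one simulated patient."""
    return (DRUG_EFFECT * got_drug
            + EXPECTATION_EFFECT * expects_benefit
            + rng.gauss(0.0, NOISE_SD))


def mean_improvement(n: int, got_drug: bool, expects_benefit: bool, seed: int) -> float:
    rng = random.Random(seed)
    return statistics.fmean(improvement(got_drug, expects_benefit, rng) for _ in range(n))


# Open-label: drug and expectation always come together, so they can't be separated.
open_label = mean_improvement(10_000, got_drug=True, expects_benefit=True, seed=1)

# Blinded trial: both arms have the same expectations, so only the drug differs.
blind_drug = mean_improvement(10_000, got_drug=True, expects_benefit=True, seed=2)
blind_placebo = mean_improvement(10_000, got_drug=False, expects_benefit=True, seed=3)

print(f"open-label improvement:     {open_label:.1f}")
print(f"blinded drug minus placebo: {blind_drug - blind_placebo:.1f}")
```

The open-label number lands near the expectation effect, while the blinded difference lands near zero, which is exactly the gap that is hard to measure when participants can tell they got a psychoactive drug.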

2·3 months ago

I think those are all good questions that I don’t think anyone really has conclusive answers to (yet). Hopefully the researchers will have the funds in the future to investigate those and more!

16·3 months ago

From the article:

Squeezed in alongside their main projects, the investigation took eight years and included dozens of participants. The results, published in 2016, were revelatory [1]. Two to three months after giving birth, multiple regions of the cerebral cortex were, on average, 2% smaller than before conception. And most of them remained smaller two years later. Although shrinkage might evoke the idea of a deficit, the team showed that the degree of cortical reduction predicted the strength of a mother’s attachment to her infant, and proposed that pregnancy prepares the brain for parenthood.

121·3 months ago

I think that hypothesis still holds, as it has always assumed training data of sufficient quality. This study is more saying that the places we’ve traditionally harvested training data from are beginning to be polluted by low-quality data.

161·3 months ago

From the article:

To demonstrate model collapse, the researchers took a pre-trained LLM and fine-tuned it by training it using a data set based on Wikipedia entries. They then asked the resulting model to generate its own Wikipedia-style articles. To train the next generation of the model, they started with the same pre-trained LLM, but fine-tuned it on the articles created by its predecessor. They judged the performance of each model by giving it an opening paragraph and asking it to predict the next few sentences, then comparing the output to that of the model trained on real data. The team expected to see errors crop up, says Shumaylov, but were surprised to see “things go wrong very quickly”, he says.
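The researchers used an LLM, but the same feedback loop can be sketched with a much simpler "model". This is a toy analogue, not their setup: a 1-D Gaussian stands in for the LLM, and "fine-tuning" is just refitting its mean and standard deviation. Each generation is fitted only to samples drawn from its predecessor's fit, and with small samples the fitted spread drifts toward zero, so the tails of the original distribution disappear first.

```python
import random
import statistics


def collapse(generations: int, n_samples: int, seed: int = 0) -> list[float]:
    """Fit a Gaussian, sample from the fit, refit on those samples, and repeat.

    Returns the fitted standard deviation at each generation.
    """
    rng = random.Random(seed)
    # Generation 0 is trained on "real" data drawn from N(0, 1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    stds = []
    for _ in range(generations):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood estimate, biased low
        stds.append(sigma)
        # The next generation only ever sees samples from its predecessor's fit.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    return stds


stds = collapse(generations=300, n_samples=10)
print(f"generation 0 std: {stds[0]:.3f}")
print(f"generation {len(stds) - 1} std: {stds[-1]:.2e}")
```

The sample size of 10 is deliberately tiny to make the effect fast; larger samples slow the drift but, in this toy model, do not remove it.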

20·3 months ago

What they see as “bad research” is looking at an older cohort without taking into account their earlier drinking habits: were they previously alcoholics, or did they generally have other health problems?

If you don’t correct for these things, you might find that people who don’t drink seem less healthy than people who do. BUT that’s not because they’re not drinking; it’s because of their preexisting conditions. Their peers who drink a little tend not to have these preexisting conditions (on average).
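Here is a toy simulation of this kind of confounding (often called the "sick quitter" effect). All numbers are hypothetical: 30% of people have a preexisting condition, those people are much more likely to abstain, and drinking has zero effect on health by construction. The naive comparison still makes drinkers look healthier. Comparing within each group of preexisting condition removes the gap. That is the simplest form of adjustment; real studies typically use regression or matching instead.

```python
import random
import statistics


def simulate(n: int = 100_000, seed: int = 0) -> list[tuple[bool, bool, float]]:
    """Return (preexisting, drinks, health) for n hypothetical people."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        preexisting = rng.random() < 0.3      # hypothetical prevalence
        p_drinks = 0.2 if preexisting else 0.7  # sick people tend to abstain
        drinks = rng.random() < p_drinks
        # Health depends only on preexisting conditions; drinking has no effect.
        health = 60.0 - 20.0 * preexisting + rng.gauss(0.0, 5.0)
        people.append((preexisting, drinks, health))
    return people


def mean_health(people, drinks: bool, preexisting=None) -> float:
    """Mean health of drinkers or abstainers, optionally within one stratum."""
    return statistics.fmean(
        h for p, d, h in people
        if d == drinks and (preexisting is None or p == preexisting)
    )


people = simulate()
naive_gap = mean_health(people, True) - mean_health(people, False)
adjusted_gaps = [
    mean_health(people, True, p) - mean_health(people, False, p) for p in (False, True)
]
print(f"naive gap (drinkers - abstainers): {naive_gap:.1f}")
print("gap within each stratum:", [round(g, 1) for g in adjusted_gaps])
```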

17·4 months ago

Here’s an actual explanation of the ‘sneaked reference’:

However, we found through a chance encounter that some unscrupulous actors have added extra references, invisible in the text but present in the articles’ metadata, when they submitted the articles to scientific databases. The result? Citation counts for certain researchers or journals have skyrocketed, even though these references were not cited by the authors in their articles.
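The mechanism boils down to a mismatch between two reference lists: what the article text actually cites and what was deposited as metadata. A minimal sketch, assuming we already have both lists as sets of DOIs (the `Article` record and the `10.0000/...` identifiers below are hypothetical, not any database's real schema), shows how comparing them exposes sneaked references and how counting from metadata inflates citations.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical record: references cited in the text vs. those in the metadata."""
    doi: str
    refs_in_text: set[str]
    refs_in_metadata: set[str]


def sneaked_references(article: Article) -> set[str]:
    """References present in the submitted metadata but never cited in the text."""
    return article.refs_in_metadata - article.refs_in_text


def citation_counts(articles: list[Article], use_metadata: bool) -> Counter:
    """Count citations per DOI, from either the metadata or the actual text."""
    return Counter(
        ref
        for a in articles
        for ref in (a.refs_in_metadata if use_metadata else a.refs_in_text)
    )


articles = [
    Article("10.0000/paper-1", {"10.0000/x"}, {"10.0000/x", "10.0000/boosted"}),
    Article("10.0000/paper-2", {"10.0000/y"}, {"10.0000/y", "10.0000/boosted"}),
]
print(sneaked_references(articles[0]))
print(citation_counts(articles, use_metadata=True)["10.0000/boosted"])
print(citation_counts(articles, use_metadata=False)["10.0000/boosted"])
```

In this toy example, `10.0000/boosted` gains two citations from metadata alone while the text never cites it.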

2·7 months ago

Thank you, those are some good points!

5·7 months ago

Could you explain a bit more about why it’s insane to have it as a Docker volume instead of a mount point on the host? I’m not too well-versed in Docker (or maybe hosting in general).


Interesting that they have such a greedy/stupid bot.

Could it be this fella who’s hitting you up? https://claude.ai/login

1·9 months ago

Yeah, not the clearest title about what the article is about, haha.

Could you link some of those studies? I’d be interested to read more about that

51·1 year ago

But that study was done on people aged 65+ for just 11 weeks? I mean, sure, they didn’t measure any significant changes to the brain, but that doesn’t rule out changes over a longer period. Eleven weeks is not long to practice a language.

3·1 year ago

From the method section of the paper (Hussain et al., Environ. Sci. Technol. 57 (2023)):

Materials and Property Characterization. From a popular US chain store, two brands of baby food containers made of polypropylene and one brand of reusable food pouch without material information on the label were purchased. The selection of polypropylene containers was based on its widespread use in baby food packaging. These choices aimed to showcase diverse types of baby food packaging. The food containers and the food pouch were analyzed for their semicrystalline structure and thermal stability by DSC using a Q200 differential scanning calorimeter (TA Instruments, New Castle, DE). Briefly, a small sample weighing between 3 and 8 mg was taken from each container or pouch, placed in a DSC aluminum pan/lid assembly, and crimped with a press. The samples were heated and cooled at a rate of 10 °C/min under a nitrogen atmosphere, resulting in calorimetric curves that indicate the heat transfer to and from the polymer sample during the thermal cycle, which was used to monitor phase transitions.

Transmission wide-angle X-ray diffraction (WAXD) of the reusable food pouch was performed at the 12-ID-B beamline at the Advanced Photon Source (Argonne National Laboratory), using incident X-rays with energy 13.30 keV and a Pilatus 300k 2D detector mounted 0.4 m from the sample. WAXD patterns of the two plastic containers were acquired in reflection geometry with a Bruker-AXS D8 Discover equipped with a Cu Kα lab source (λ = 1.5406 Å) and a Vantec 500 area detector. In all cases, the acquired 2D patterns were radially averaged to produce 1D intensity (I) vs scattering vector (q) plots.
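The two WAXD setups use different X-ray wavelengths, so the same crystal reflection shows up at different scattering angles 2θ on each instrument; expressing the data in q (the standard q = 4π sin θ / λ, with λ = hc/E for the 13.30 keV beam) is one likely reason the plots use a common q axis. A small sketch of those conversions follows; the 14° peak position is hypothetical, not a value from the paper.

```python
import math

HC_KEV_ANGSTROM = 12.39842  # Planck constant times speed of light, in keV·Å


def wavelength_from_energy(energy_kev: float) -> float:
    """X-ray wavelength in Å for a photon energy in keV (lambda = hc / E)."""
    return HC_KEV_ANGSTROM / energy_kev


def q_from_two_theta(two_theta_deg: float, wavelength: float) -> float:
    """Scattering vector magnitude q = 4*pi*sin(theta) / lambda, in 1/Å."""
    theta = math.radians(two_theta_deg) / 2.0
    return 4.0 * math.pi * math.sin(theta) / wavelength


def two_theta_from_q(q: float, wavelength: float) -> float:
    """Inverse of q_from_two_theta: scattering angle 2θ in degrees."""
    return 2.0 * math.degrees(math.asin(q * wavelength / (4.0 * math.pi)))


CU_K_ALPHA = 1.5406                          # Å, lab source quoted in the methods
SYNCHROTRON = wavelength_from_energy(13.30)  # Å, beamline energy quoted in the methods

# A hypothetical peak at 2θ = 14° on the Cu Kα instrument...
q = q_from_two_theta(14.0, CU_K_ALPHA)
# ...has the same q (and d-spacing) but a smaller 2θ at the synchrotron wavelength.
print(f"q = {q:.3f} 1/Å, d = {2 * math.pi / q:.2f} Å")
print(f"2θ at 13.30 keV: {two_theta_from_q(q, SYNCHROTRON):.2f}°")
```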

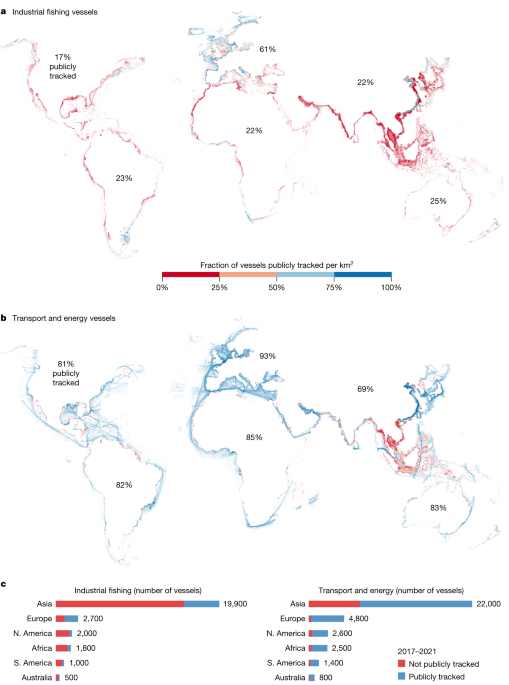

Ahh that’s wack. The article it’s based on is open-access: https://www.nature.com/articles/s41586-024-07856-5