For your convenience:

The researchers pointed out that the vulnerability cannot be exploited remotely. An attacker can trigger the issue by providing crafted inputs to applications that employ these [syslog] logging functions [in apps that allow the user to feed crafted data to those functions].

This is a privilege escalation.

The hero we need."; DROP TABLE “users”;

If it isn’t little Bobby Tables again.

This may be difficult to exploit in practice - I don’t think most user applications use syslog.

Unless you have user access to a system with gcc on it.

You still need some privileged process to exploit. Glibc code doesn’t get any higher privileges than the rest of the process. From the kernel’s point of view, it’s just a part of the program like any other code.

So if triggering the bug in your own process was enough for privilege escalation, it would also be a critical security vulnerability in the kernel - it can’t allow you to execute a magic sequence of instructions in your process and become root, as that would completely destroy any semblance of process / user isolation.
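To make the point above a bit more concrete, here is a minimal Python sketch (not from the article) of what "a privileged process" usually means on Linux: a program whose file has the setuid bit set, so that it runs with an effective UID different from the real UID of the user who started it. The helper names `has_setuid_bit` and `crosses_privilege_boundary` are made up for illustration.

```python
import os
import stat


def has_setuid_bit(mode):
    """True if a file mode (e.g. os.stat(path).st_mode) has the setuid bit set."""
    return bool(mode & stat.S_ISUID)


def crosses_privilege_boundary(real_uid, effective_uid):
    """True if a process runs with a different identity than the user who launched it."""
    return real_uid != effective_uid


if __name__ == "__main__":
    # For an ordinary program started by an ordinary user, both values are equal,
    # so a memory bug in it gives the attacker nothing they did not already have.
    print("real uid:", os.getuid(), "effective uid:", os.geteuid())
    print("privilege boundary:", crosses_privilege_boundary(os.getuid(), os.geteuid()))
```

A bug in a library only becomes a privilege escalation when it can be triggered inside a process on the far side of such a boundary.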

"A qsort vulnerability is due to a missing bounds check and can lead to memory corruption. It has been present in all versions of glibc since 1992. "

This one amazes me. Imagine how many vulnerabilities future researchers will discover in ancient software that persisted/persist for decades.

That’s not the main part of the article, just a footnote, for anyone wondering.

The flaw resides in the glibc’s syslog function, an attacker can exploit the flaw to gain root access through a privilege escalation.

The vulnerability was introduced in glibc 2.37 in August 2022.

So, it must be with the BSDs too?

BSDs use libc

IIRC BSD does not use glibc, but I’m not very involved with BSD.

It wouldn’t make sense. Glibc is LGPL licensed, not really compatible with the BSD license…

Wait, why does a compiler have system log functionality?

glibc is a library, gcc is the compiler.

You are probably confusing glibc with gcc and g++. Glibc is an implementation of the C standard library, made by GNU (that’s where the g in the name comes from).

If you were to look into it, it uses syscalls to tell the underlying computer system what to do when you call functions such as `printf`.

If you want to read more, see here
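As a rough illustration of that layering, the Python sketch below formats a string in user space and then hands the bytes to the kernel with `os.write`, which is a thin wrapper around the `write` system call. `tiny_printf` is a made-up name, and real `printf` in glibc adds buffering and much more; this only shows the general shape.

```python
import os


def tiny_printf(fd, fmt, *args):
    """Format in user space, then pass the resulting bytes to the kernel via write(2)."""
    data = (fmt % args).encode()
    written = 0
    # write(2) may accept fewer bytes than requested, so loop until all are sent
    while written < len(data):
        written += os.write(fd, data[written:])
    return written


if __name__ == "__main__":
    tiny_printf(1, "hello from file descriptor %d\n", 1)  # fd 1 is stdout
```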

C is just crazy. You accidentally forget to put the bounds in a sorting function, and now you are root.

According to the link in the article, the qsort() bug can only be triggered with a non-transitive cmp() function. Would such a cmp function ever be useful?

You don’t necessarily have to write a non-transitive cmp() function willingly, it may happen that you write one without realizing due to some edge cases where it’s not transitive.
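One well-known way to end up with such a comparator by accident is the C idiom `return a - b;`, which misbehaves when the subtraction overflows. The sketch below models that in Python, assuming 32-bit two's-complement wraparound (in real C, signed overflow is undefined behaviour, so this is only an approximation of what typically happens). All function names here are hypothetical.

```python
from itertools import permutations

INT_MIN, INT_MAX = -2**31, 2**31 - 1


def wrap_int32(x):
    """Reduce x to the signed 32-bit range, mimicking typical C int wraparound."""
    return (x + 2**31) % 2**32 - 2**31


def cmp_subtract(a, b):
    """Models the C idiom `return a - b;` on 32-bit ints."""
    return wrap_int32(a - b)


def cmp_safe(a, b):
    """Overflow-free three-way comparison."""
    return (a > b) - (a < b)


def find_transitivity_violation(cmp, values):
    """Return a triple (a, b, c) with a < b and b < c but not a < c, or None."""
    for a, b, c in permutations(values, 3):
        if cmp(a, b) < 0 and cmp(b, c) < 0 and not cmp(a, c) < 0:
            return (a, b, c)
    return None


samples = [INT_MIN, -1, 0, 1, INT_MAX]

if __name__ == "__main__":
    print("subtract idiom:", find_transitivity_violation(cmp_subtract, samples))
    print("safe compare:  ", find_transitivity_violation(cmp_safe, samples))
```

The subtraction version looks harmless and works for small values, which is why this kind of bug tends to go unnoticed.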

Security-critical C and memory safety bugs. Name a more iconic duo…

I’d have kinda preferred for public disclosure to have happened after the fix propagated to distros. Now we get to hurry the patch to end-users which isn’t always easily possible. Could we at least have a coordinated disclosure time each month? That’d be great.

Public disclosure is typically done 90 days after devs are privately notified. That should be enough time for security-critical bugs.

They did follow that. You can read their disclosure timeline in their report.

Problem is that the devs of glibc aren’t the only people interested in getting glibc patched but us distro maintainers too.

What I would have preferred would be an early private disclosure to the upstream maintainers and then a public but intentionally unspecific disclosure with just the severity to give us distro people some time to prepare a swift rollout when the full disclosure happens and the patch becomes public.

Alternatively, what would be even better would have been to actually ship the patch in a release but not disclose its severity (or even try to hide it by making it seem like a refactor or non-security relevant bugfix) until a week or two later; ensuring that any half-decent distro release process and user upgrade cycle will have the patch before its severity is disclosed. That’s how the Linux kernel does it AFAIK and it’s the most reasonable approach I’ve seen.

deleted by creator

I’m afraid I don’t understand what you’re trying to say.

I am not sure either, but maybe they meant “maybe an early and public disclosure increases the urgency of the fix for the developers”?

There have been cases [1] where vulnerabilities in software have been found, and the researcher that found it will contact the relevant party and nothing comes of it.

What they’re suggesting is that the researcher who discovered this might have already disclosed this in private, but felt that it wasn’t being patched fast enough, so they went public.

The solution here generally afaik is to give a specific deadline before you go public. It forces the other party to either patch it, or see the problem happen when they go live. 90 days is the standard timeframe for that since it’s enough time to patch and rollout, but still puts pressure on making it happen.

glibc is great, but holy shit the source code is obscured into oblivion, so hard to understand, with hardcoded optimizations, and compiler optimizations. I understand how difficult it is to find vulnerabilities. A bit sad that the only truly free software C lib has code that is so hard to actually read or even contribute to.

For what it’s worth, glibc is very much performance-critical, so this shouldn’t be a surprise. Any possible optimization is worth it.

There are a ton of free software libc implementations outside of glibc. I think most implementations of libc are free software at this point. There’s Bionic, the BSD libcs, musl, the Haiku libc, the OpenSolaris/OpenIndiana libc, Newlib, relibc, the ToaruOS libc, the SerenityOS libc and a bunch more. Pretty sure Wine/ReactOS also have free implementations of the Windows libc.

Glibc has extensions that fragment compatibility. If Glibc is replaced by another libc, some apps print an error, or don’t work. I noticed that on Alpine.

Eventually it’ll be easier to create a compatible drop-in replacement than maintain the decades old code, if it isn’t already

Unlikely, unless you drop backwards compatibility for undefined behaviour. Unless you write a complete specification on it, you’ll end up either breaking old stuff, or slowly rebuilding the same problems.

Like what’s happening to X. Wayland is replacing it.

Wayland is not a drop-in replacement tho. It’s like if glibc developers declared it obsolete and presented a “replacement” that has a completely different API and has 1/100 of glibc functionality and a plugin interface. And then all the dozens of Linux distros have to write all the plugins from scratch to add back missing functionality and do it together in perfect cooperation so that they remain compatible with each other.

Debian (versions 12 and 13), Ubuntu (23.04 and 23.10), and Fedora (37 to 39). Other distributions are probably also impacted.

Patch time

12 and 13 have patches out in the security repo. `apt update && apt upgrade` fixed it right up.

https://security-tracker.debian.org/tracker/CVE-2023-6246

Don’t know if Fedora has any similar easy way of tracking vulnerabilities

Imagine all the vulnerabilities that proprietary and poorly reviewed code has…

Let’s go Musl!

meanwhile rust

Doesn’t compile where musl does.

While there may be challenges and specific configurations required, you absolutely can compile Rust on and targeting to a musl-based system.

I meant, Rust supports fewer architectures.

Rust actually supports most architectures (SPARC, AMD64/x86-64, ARM, PowerPC, RISC-V, and AMDGPU*). The limitations are from LLVM not supporting some architectures (Alpha, SuperH, and VAX) and some instruction sets (sse2, etc.); z/Architecture is a bit of an outlier that has major challenges to overcome for LLVM-Rust. This is not going to be a problem when GCC-Rust is finished.

*AMDGPU: not 100%, but works well enough to actually use in production and gets better all the time.

Thanks! My bad, sorry.

Np. It’s a common point of confusion.

You can use `rustup target list` to see all available architectures and targets.

Yeah Musl is pretty good to learn C libs but the main red flag is the MIT Licence

Why? MIT is more liberal than GPL. Why is it a red flag?

At a time where proprietary software is becoming more and more nasty and prevalent, on top of what has always accompanied it, we shouldn’t be letting proprietary software developers take advantage of free code only to lock theirs away as proprietary. It advantages nobody except proprietary devs, who don’t have the users at heart.

The goal of the GPL is to fight that.

The problem with pushover licenses like MIT in this case is that a lot of this software is designed to displace copylefted code. And when it does, you lose the protection of copyleft in that particular area and all of a sudden proprietary software gets a leg up on us. It’s bad for software freedom and the users by extension, and good for proprietary software developers.

But the licence is chosen by the software author - unless that right to choose is taken away by a viral licence like the GPL, of course. In any case, I licence everything I write that I can as 3-clause BSD because I don’t give a fuck. I wrote the software for me, and it costs me nothing if it’s used by a shitty proprietary software stealer, or a noble OSS developer. Neither of them are paying me.

OSS should drive, is driving, and eventually will drive for-pay software to extinction, and it should do it through merit, not some legal trickery.

You’re missing the point. Yes, the license is chosen by the author, but if that pushover licensed software becomes favoured over copyleft software, then proprietary software has a leg up as I explained. That’s it.

GPL is also not a “viral” license, because that would imply that it seeks out and infects anything it can find. But developers choose which code they use, it doesn’t just appear in their own code without permission. So a better analogy would be “spiderplant” license, since you’re taking part of a GPL program (a spiderplant), and putting it in your own (where the GPL’s influence “grows” to). That is completely the software developer’s decision and not like a virus at all.

It might not cost you anything personally if a proprietary developer usurps your code, but it does cost overall user freedom and increases proprietary dominance, where copyleft licenses would have done the opposite if your code was worth using. For that reason, I like strong copyleft. But by all means, keep using the license you want to, as long as it’s not proprietary I won’t judge. These are just my thoughts.

The point of free/open source licenses isn’t to remove money from software either, it’s perfectly possible to sell libre software. It’s about what the recipients of that software have the freedom to do with it, and not giving the developer control over their users. We should serve the community, not betray them.

Lastly, although free alternatives are often technically superior to their closed-source competitors, at the end of the day, if you have a slightly faster program which does nasty spying, locking of functionality, etc, and a slightly slower program which does not, I’d be inclined to say that the slower program should be preferred by virtue that it does not do nasty things to its users, and that then you won’t be supporting such behaviour.

Lastly, although free alternatives are often technically superior to their closed-source competitors, at the end of the day

I am 100% in agreement with you here. While I’m not by any means a Libertarian, I prefer MIT and BSD licenses because they are truly free. The GPL is not: it removes freedoms. Now, you argue that limiting freedom can be a net good - we limit the freedom to rape and murder, and that’s good. I don’t agree that the freedoms the GPL removes are equivalent, and can indeed be harmful.

I don’t mind others using the GPL, but I won’t.

We can agree to disagree on the freedom point. The only “freedom” I see being taken away with the GPL is the removing of the freedom of other people.

I don’t mind the MIT/BSD licenses, but I won’t use them. We can agree on that.

I get that it’s more liberal, and I understand why. But that means that changes to the software do not need to be shared, which for normal users really does not matter. But again, we are giving multinational corporations so much in exchange for nothing, when they don’t treat their users the same way.

MIT is a good licence as an idea. In reality, multinational corporations are evil AF. The idea of free software, in a sense, is that free software can get so much better than proprietary software, eventually forcing proprietary companies to implement it themselves in their programs.

If giving and taking were 1:1 in the software community, then again, the MIT license would be perfect. In reality it isn’t. For major programs that have a lot of implications for new programs, I do not recommend MIT and similar. For feature-like projects it is totally okay IMO.

I respect your opinion on this, and will say only one more thing: having worked in the corporate software space for decades, you don’t want their software. Most of it is utter crap. It’s a consequence of finance having too much indirect influence, high turnover, a lot of really uninspired and mediocre developers, and a lack of the fundamentally evolutionary pressures that exist in OSS. The only thing corporations do better is marketing.

The vulnerability is on logs, and that has nothing related to the library. Even less with the language…

The vulnerability is in the library’s logging function, which is coded in the C language. musl is also C (afaik), it’s just a more modern, safer rewrite of libc.

I’m not sure what you mean by a “vulnerability in the logs”. In a logger or parser, sure, but did you think text data at rest was able to reach out and attack your system?

True, the logging is part of the library, but it’s totally centered on what the developers are logging. It’s a bad practice to log sensitive information, which can be used by someone with access to the logs for sure, but that doesn’t mean the library is broken and has to be replaced. The library’s logs need to be audited, and this is as true for glibc as it is for musl, no exception, and it’s not a one time thing, since as the libraries evolve, sensitive information can be introduced unintentionally (perhaps debugging something required it on some particular testing, and it was forgotten there).

BTW, what I meant with the language, is that no matter the language, you might unintentionally allow some sensitive information in the logs, because that’s not a syntactic error, and it’s not violating any compiling rules. It’s that something is logged that shouldn’t.

Also, the report indicates that the vulnerability can’t be exploited remotely, which reduces the risk for several systems…

The vulnerability has nothing to do with accidentally logging sensitive information, but crafting a special payload to be logged which gets glibc to write memory it isn’t supposed to write into because it didn’t allocate memory properly. glibc goes too far outside of the scope of its allocation and writes into other memory regions, which an attacker could carefully hand craft to look how they want.

Other languages wouldn’t have this issue because

- they wouldn’t willy nilly allocate a pointer directly like this, but rather make a safer abstraction type on top (like a C++ vector), and
- they’d have bounds checking when the compiler can’t prove you stay inside valid memory regions. (Manually calling .at() in C++, or even better - using a language like rust which makes bounds checks default and unchecked access be opt in with a special method).
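The difference between the two approaches can be sketched with a toy model. This is not how glibc or the actual exploit works; it only assumes a flat 16-byte "heap" in which an 8-byte log buffer sits directly in front of 8 bytes of unrelated data. The names `write_unchecked`, `write_checked` and `fresh_memory` are invented for the example.

```python
def write_unchecked(memory, start, data):
    """Copy every byte of data, trusting the caller about the buffer size (like a raw memcpy)."""
    for offset, byte in enumerate(data):
        memory[start + offset] = byte


def write_checked(memory, start, capacity, data):
    """Refuse to copy more bytes than the buffer was allocated to hold."""
    if len(data) > capacity:
        raise ValueError(f"{len(data)} bytes do not fit in a {capacity}-byte buffer")
    memory[start:start + len(data)] = data


def fresh_memory():
    """16 bytes: an 8-byte log buffer followed by 8 bytes of unrelated data."""
    memory = bytearray(16)
    memory[8:16] = b"uid=1000"
    return memory


if __name__ == "__main__":
    payload = b"AAAAAAAA" + b"uid=0000"  # 16 bytes aimed at an 8-byte buffer

    memory = fresh_memory()
    write_unchecked(memory, 0, payload)
    print("after unchecked write:", bytes(memory[8:16]))  # neighbouring data overwritten

    memory = fresh_memory()
    try:
        write_checked(memory, 0, 8, payload)
    except ValueError as err:
        print("checked write refused:", err)
    print("after checked write:  ", bytes(memory[8:16]))  # neighbouring data intact
```

In the unchecked case the attacker chooses what ends up in the neighbouring region, which is the property that makes this class of bug exploitable.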

Edit: C’s bad security is well known - it’s the primary motivator for introducing rust into the kernel. Google / Microsoft both report 70% of their security vulnerabilities come from C specific issues, curl maintainer talks about how they use different sanitizers and best practices and still run into the same issues, and even ubiquitous and security critical libraries and tools like sudo + polkit suffer from them regularly.

I see, I didn’t dig into the cause, being sort of a buffer overflow. Indeed that would be prevented by other languages, sorry for my misinterpretation. Other vulnerabilities unintentionally introduced by developers on logging what shouldn’t are not dependent on anything other than auditing them, but that was not the case then.

- musl is not a programming language

That’s why I said library or language (someone else suggested rust).

Yikes.

I’d switch to musl on all of my boxes if it weren’t that nearly all precompiled software (closed source, games mainly) is compiled against glibc.

Just use flatpak and podman; in a pinch you can proot into a different system / zfs dataset / btrfs subvolume

So this means you need either Alpine repos or compile everything yourself?

Void offers musl too. Unless they’ve discontinued it.

But

compile everything yourself?

I do (almost) exactly that. I run Gentoo almost everywhere. The ‘almost’ is because Gentoo now offers an official bin repository too, so I can mix compiled and pre-compiled software. (Although you’ve always had the option to set up your own binary host).

How are you going to run steam though? Like at least alpine has wine, but there’s no way to recompile steam unfortunately.

I was never into gaming really, and while I like the progress I don’t really care tbh?

Nope. I mainly get my games (currently around 10 only) from gog.

Don’t forget Chimera Linux (not to be confused with ChimeraOS)!

That’s why you need to rock and roll

(Arch btw.)

Try having `unattended-upgrades` with a rolling distro.

I don’t want unattended upgrades >:/

Just don’t upgrade for a while and you become debian

It’s not like windows forcing you to reboot every Tuesday so Edge can come back

you shouldn’t be throwing boots through your windows

Man, I do this all the time. snapper and grub-btrfs have enabled all kinds of amazing things. I’m so close to just doing:

```
$ sudo crontab -l
* * 3 * * pacman -Syu --noconfirm
```

I’ve got separate offline backups and rescue disks, but I’m pretty confident that grub-btrfs will let me recover pretty quickly.

grub-btrfs with timeshift didn’t help me in my upgrade from Fedora 38 to 39. When I rolled back with grub-btrfs, what loaded was a weird mix of 38 and 39 that didn’t even let me browse my filesystem. I had to disassemble the laptop, take out the SSD, and use it as an external drive, and even then half of the SSD was locked. The SSD was new and chmod didn’t help, even from a live USB; I had to copy files with testdisk and dd zeros over the whole disk for it to work again.

deleted by creator

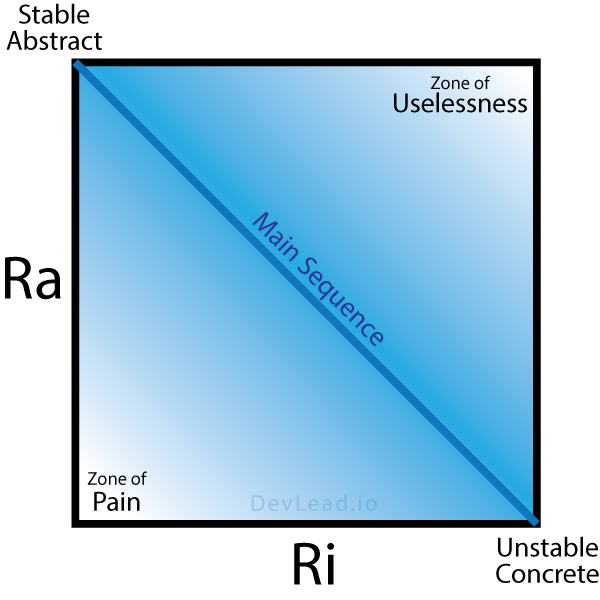

Databases are the definition of software dependency hell. They exist in the zone of pain. I love Postgres; I hate administering Postgres.

I replied to another comment with this, but Debian 12(stable, bookworm) and 13(testing, trixie) are affected by this but 12(stable, bookworm) has a patch out in the security repo.

If you wanna know whether or not you’re affected,

apt list libc6

will show your version and the one you want is 2.36-9+deb12u4

If you don’t have that,

apt update && apt upgrade

will straighten you out

13(testing, trixie) has 2.37, but it’s not fixed yet.

E: Edited to use apt list instead of apt show.

RedHat and related: https://access.redhat.com/security/cve/CVE-2023-6246

Not affected

Not affected

Not affected

etc.

Ubuntu 22.04 LTS, Linux Mint etc as well. also this is a local one, so not many people care.

Local attacker

Important detail

as i said on reddit

Glad I only run Alpine

“GNU Library C?”

I’d just like to interject for a moment. What you call “GNU Library C” is actually GNU with Linux library C and some C++ for those nifty templates, or as we like to call it “GNU/Linux Library C/C++”. Which, to be honest, it’s more like “GNU/Linux Library C/C-with-Classes” the way they’re teaching it at school, oh well.

Carry on.

I was, it seems, too subtle. I don’t call it “GNU Library C”. Nobody does. I call it the “GNU C library”. It’s weird that the author calls it that.

Aaaaaand I somehow missed that.

Then again, it wouldn’t have changed much. I’d have just infodumped you on the GNU/Linux C Library, or as we sometimes call it, the GNU plus Linux C library with macros.

Aaaaaand I somehow missed that.

Not the only one it seems - that’s on me.

GNU plus Linux C library with macros.

🤣

GNU C Library (glibc) as opposed to C Library (libc).

Who calls it “GNU Library C” though?