Why

I’m running a ZFS pool of 4 external USB drives. It’s a mix of WD Elements and enclosed IronWolfs. I’m looking to consolidate it into a single box since I’m likely to add another 4 drives to it in the near future and dealing with 8 external drives could become a bit problematic in a few ways.

ZFS with USB drives

There have been recurring questions about ZFS with USB. Does it work? How does it work? Is it recommended? And so on. The answer is complicated, but it boils down to this: yes, it works, and it can work well, so long as you ensure that everything on your USB path is good. That’s difficult, since it’s not generally known which USB-SATA bridge chipset an external USB drive has, whether it has firmware bugs, whether it requires quirks, whether it’s stable under sustained load, etc. That difficulty is then multiplied by the number of drives in the system. In my setup, for example, I swapped through multiple enclosure models until I stumbled on a rock-solid one. I’ve also had to install heatsinks on the ASM1351 USB-SATA bridge ICs in the WD Elements drives to stop them from overheating and dropping dead under heavy load. With this in mind, if a multi-bay unit like the OWC Mercury Elite Pro Quad proves to be as reliable as some anecdotes say, it could become a go-to recommendation for USB DAS that eliminates a lot of those variables, leaving just the host side, since it comes with its own cable too. And the host side tends to be reliable, since it’s typically either Intel or AMD. See the Testing section below for some tidbits about AMD.
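
Since the bridge chipset is usually the unknown, one way to get a first clue on Linux is to read each USB storage device’s vendor and product IDs from sysfs and check whether the kernel bound the `uas` or the `usb-storage` driver. The sketch below does that; `describe_usb_storage` is a hypothetical helper, and its vendor table is a deliberately tiny, illustrative subset of the public usb.ids list (ASMedia `174c`, VIA Labs `2109`). Branded drives often report the drive maker’s own vendor ID rather than the bridge vendor’s, so treat a match as a hint, not proof.

```python
from pathlib import Path

# Tiny, illustrative subset of vendor IDs from the public usb.ids list.
KNOWN_VENDORS = {
    "174c": "ASMedia",
    "2109": "VIA Labs",
}

def describe_usb_storage(sysfs_root="/sys/bus/usb/devices"):
    """Yield (device, vid:pid, vendor, product, driver) for each USB
    interface bound to the uas or usb-storage driver."""
    root = Path(sysfs_root)
    for iface in sorted(root.iterdir()):
        driver_link = iface / "driver"
        # Interfaces are named like "6-3.4:1.0"; whole devices have no colon.
        if ":" not in iface.name or not driver_link.exists():
            continue
        driver = driver_link.resolve().name  # e.g. "uas" or "usb-storage"
        if driver not in ("uas", "usb-storage"):
            continue
        dev = root / iface.name.split(":")[0]
        vid = (dev / "idVendor").read_text().strip()
        pid = (dev / "idProduct").read_text().strip()
        product_file = dev / "product"  # optional string descriptor
        product = product_file.read_text().strip() if product_file.exists() else "?"
        yield dev.name, f"{vid}:{pid}", KNOWN_VENDORS.get(vid, "unknown"), product, driver
```

On the host, `for row in describe_usb_storage(): print(row)` prints one row per attached USB storage interface. A drive that falls back to `usb-storage` instead of `uas` is itself worth a closer look.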

Initial observations of the OWC Mercury Elite Pro Quad

- Built like a tank: heavy enclosure, feet screwed in, not glued

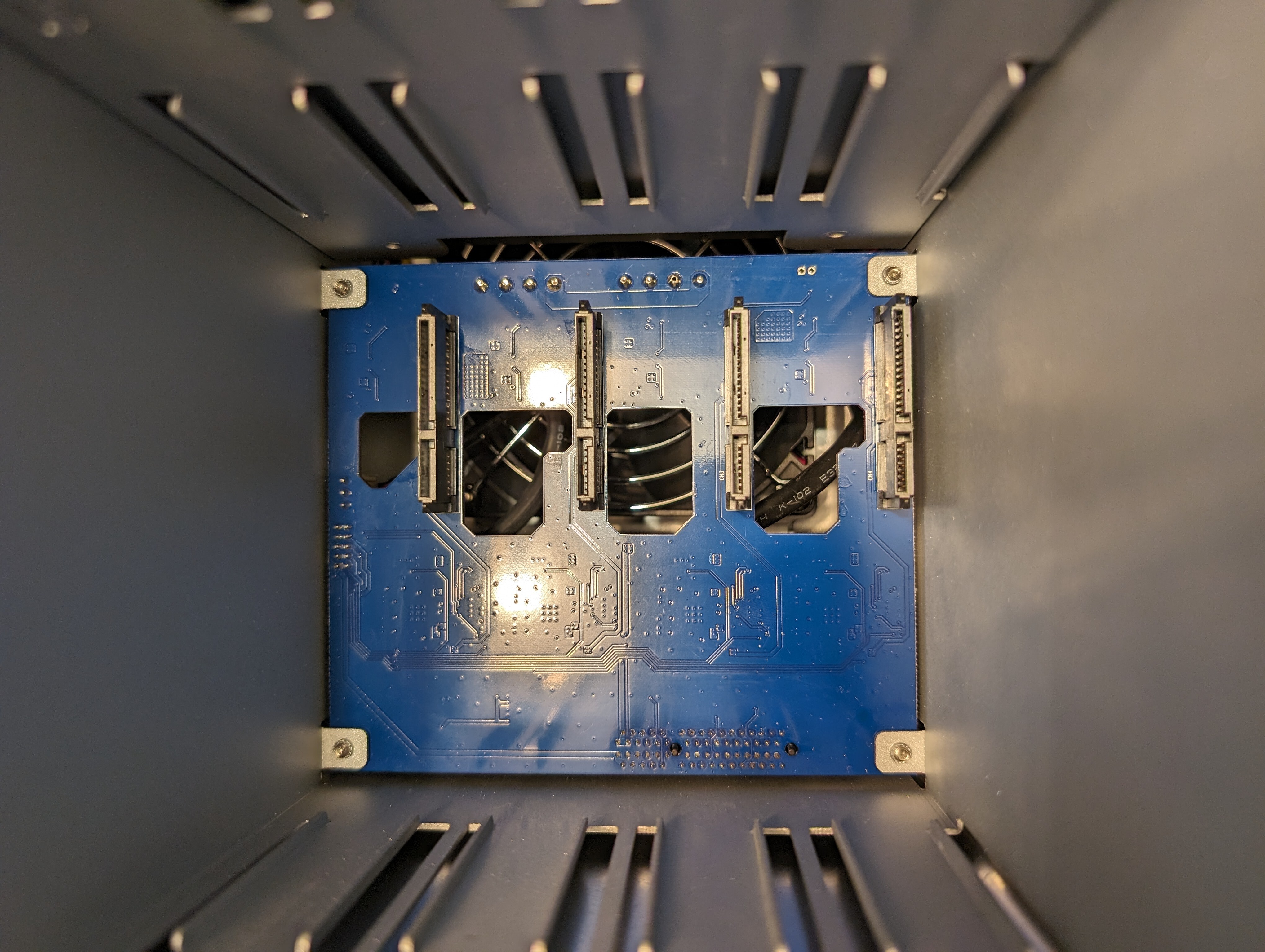

- Well designed for airflow. Air enters at the front, passes over the disks, the PSU and the main PCB, and exits at the back. IronWolf drives that averaged 55°C in individual enclosures run at 43°C in here

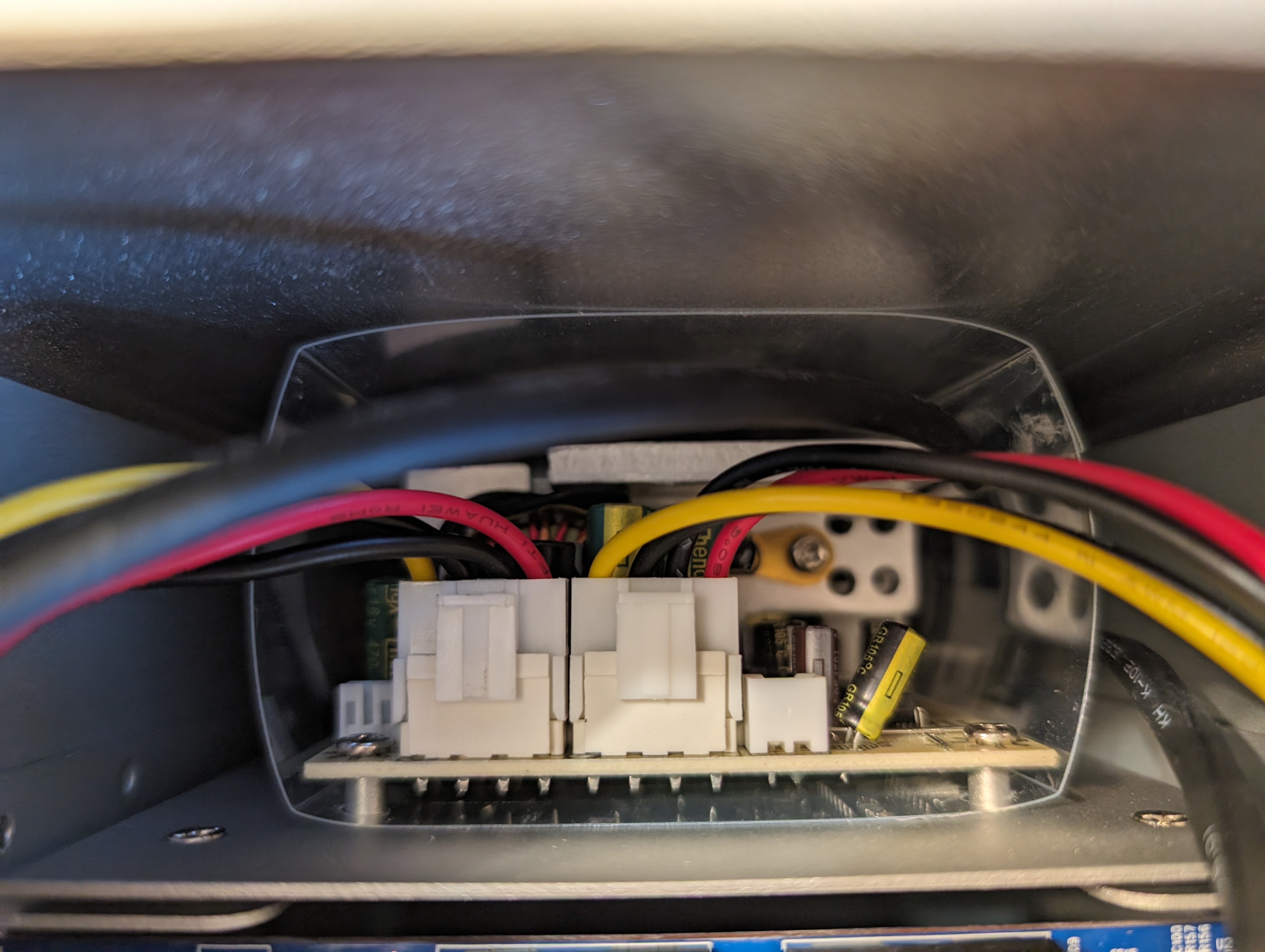

- It’s got a Good Quality DC Fan (check pics). So far it’s pretty quiet

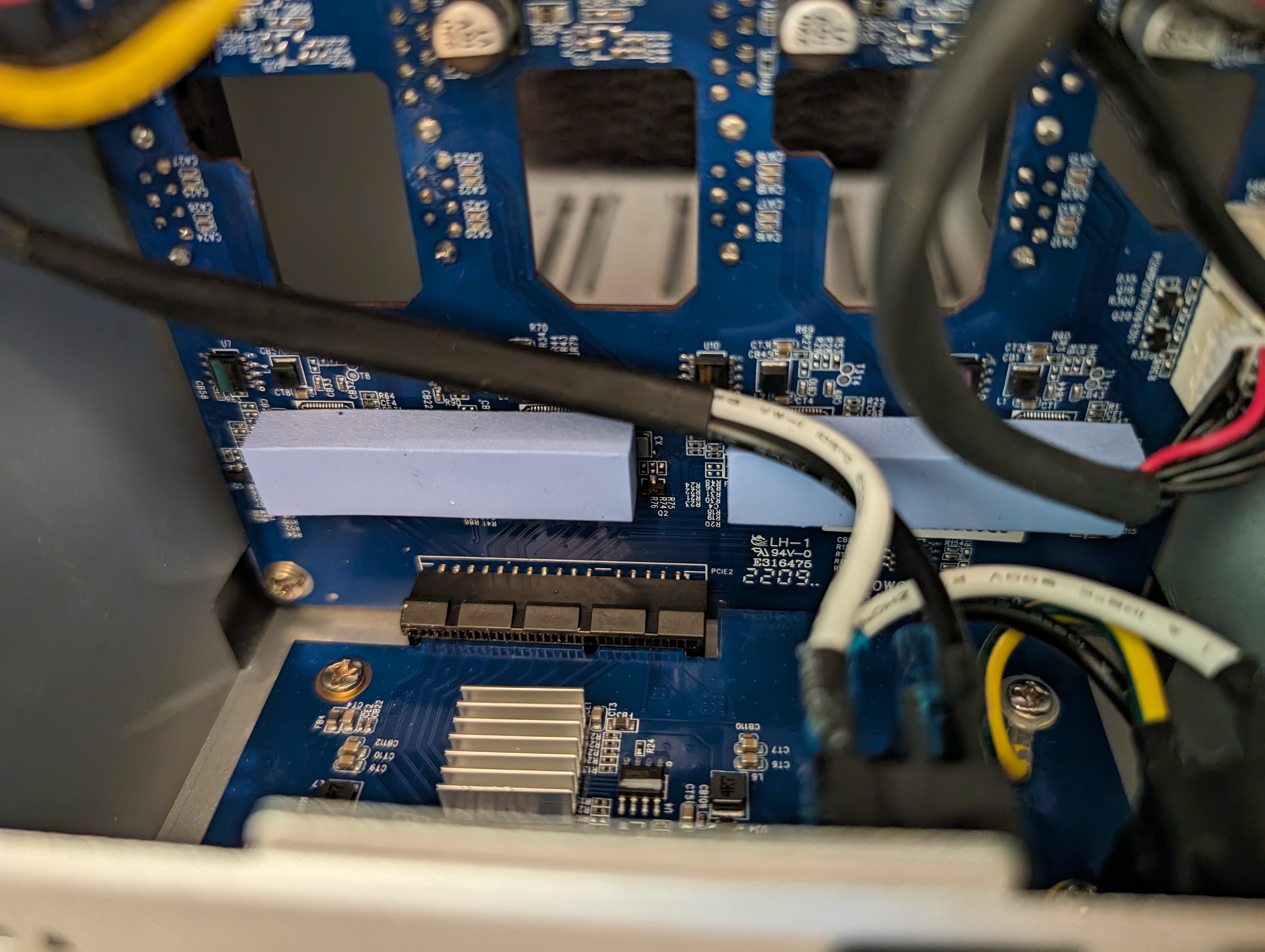

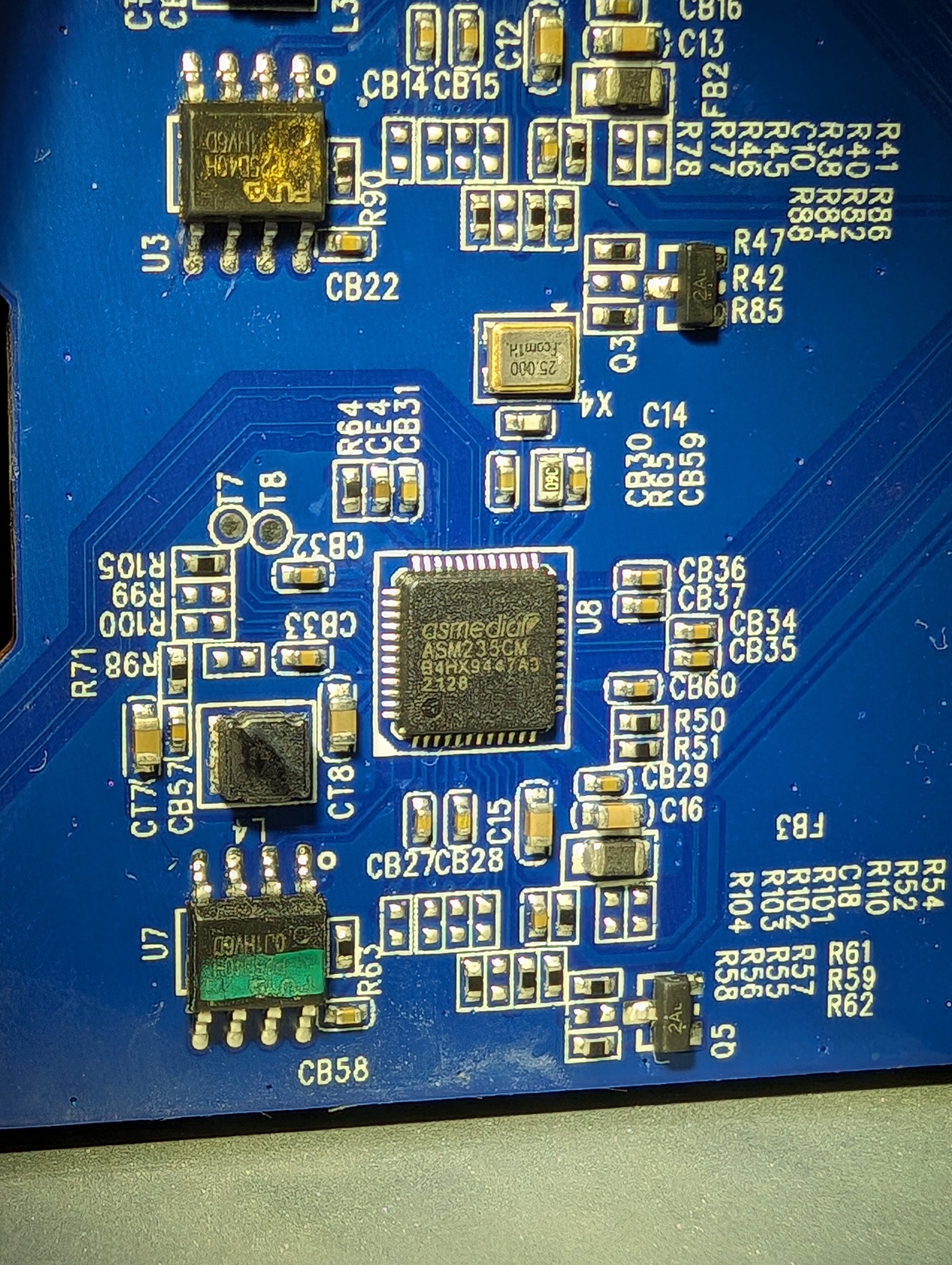

- Uses 4x ASM235CM USB-SATA bridge ICs, which are found in other well-regarded USB enclosures. It’s newer than the ASM1351, which is also reliable when not overheating

- The USB-SATA bridges are wired to a USB 3.1 Gen 2 hub - VLI-822. No SATA port multipliers

- The USB hub is heatsinked

- The ASM235CM ICs have an unusually thick thermal pad on them, but no metal heatsink on top. It appears the pads themselves serve as heatsinks, which might be enough to keep the ICs within operating temperatures

- The main PCB is an all-solid-cap affair

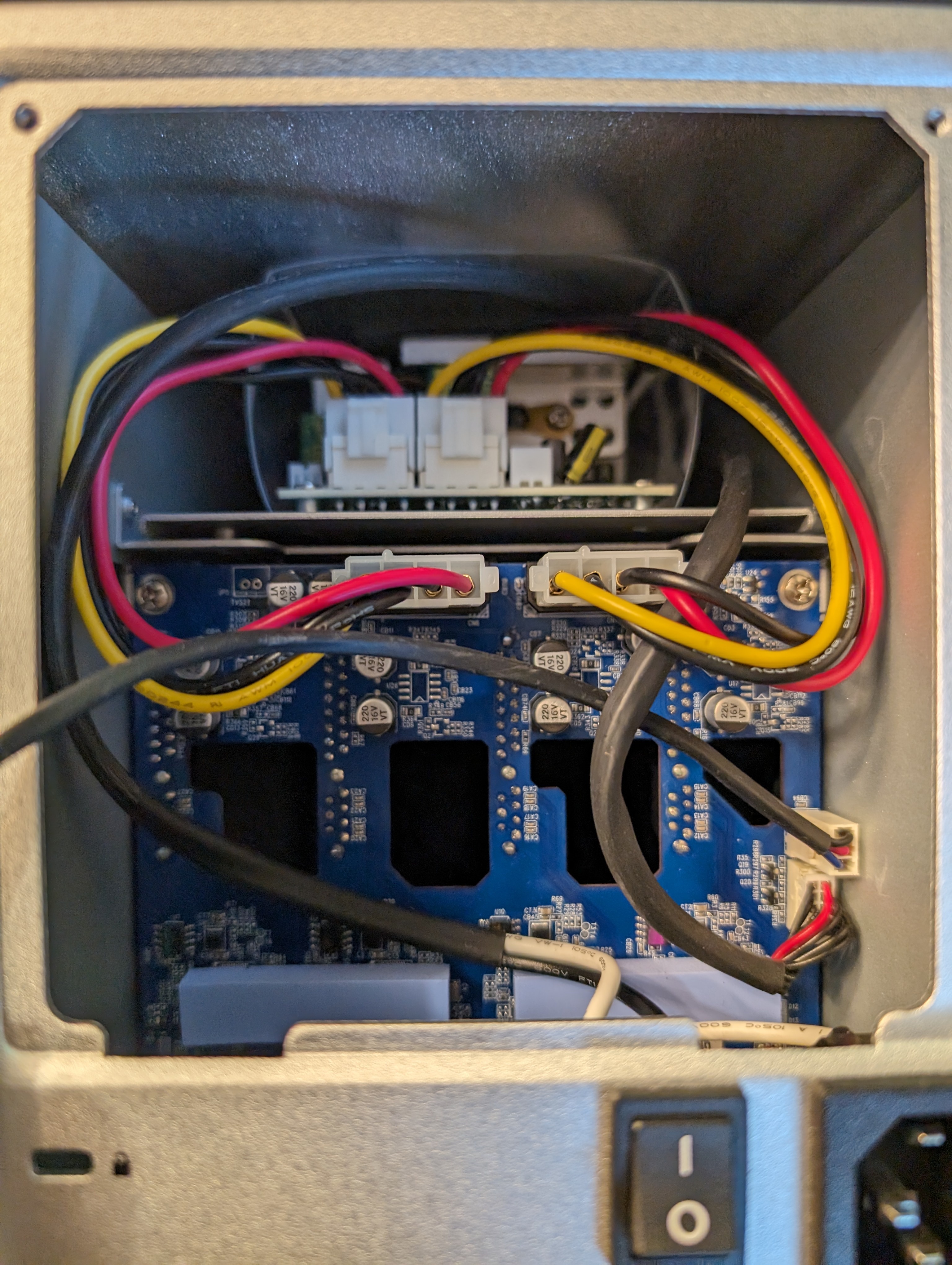

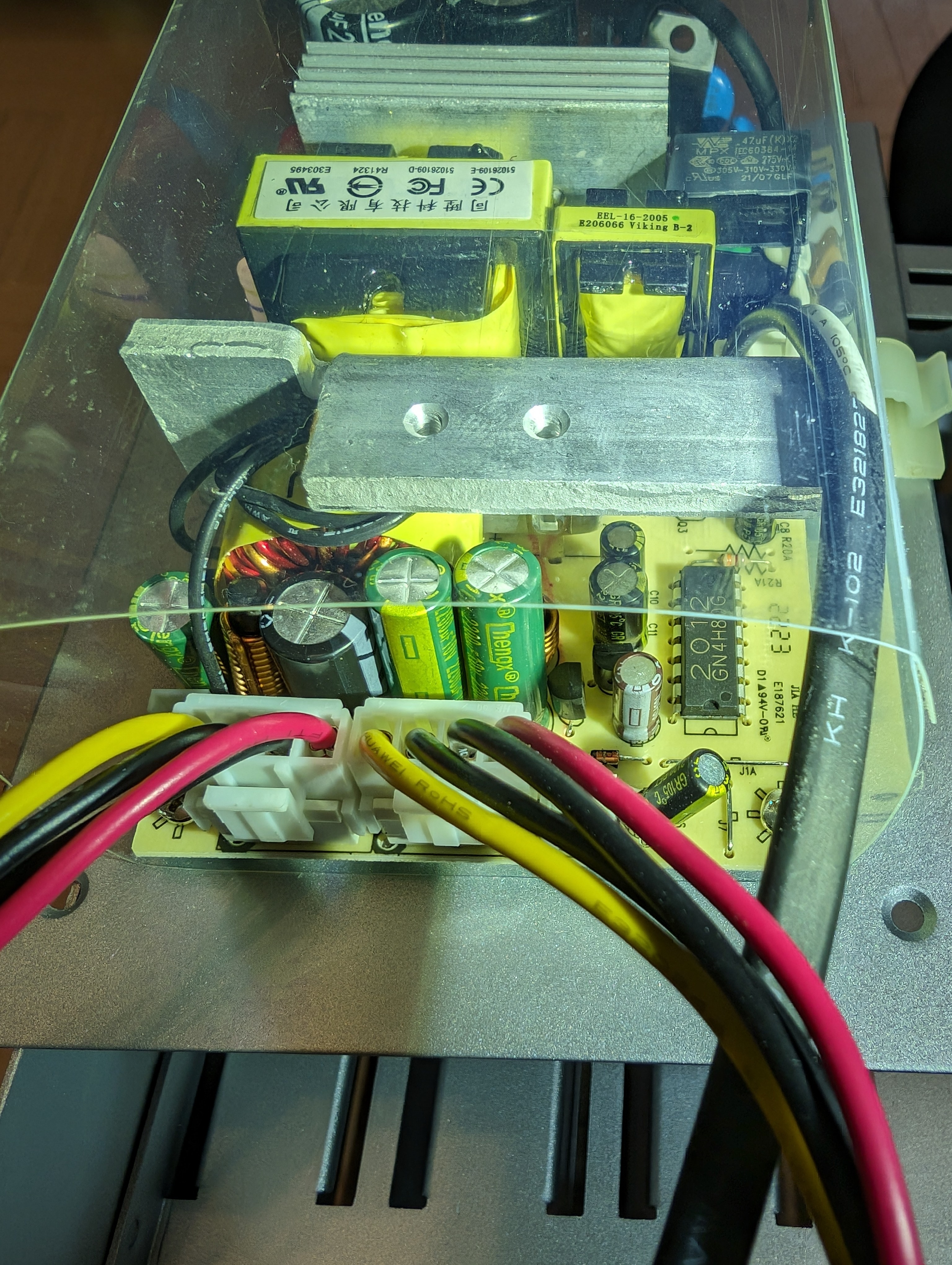

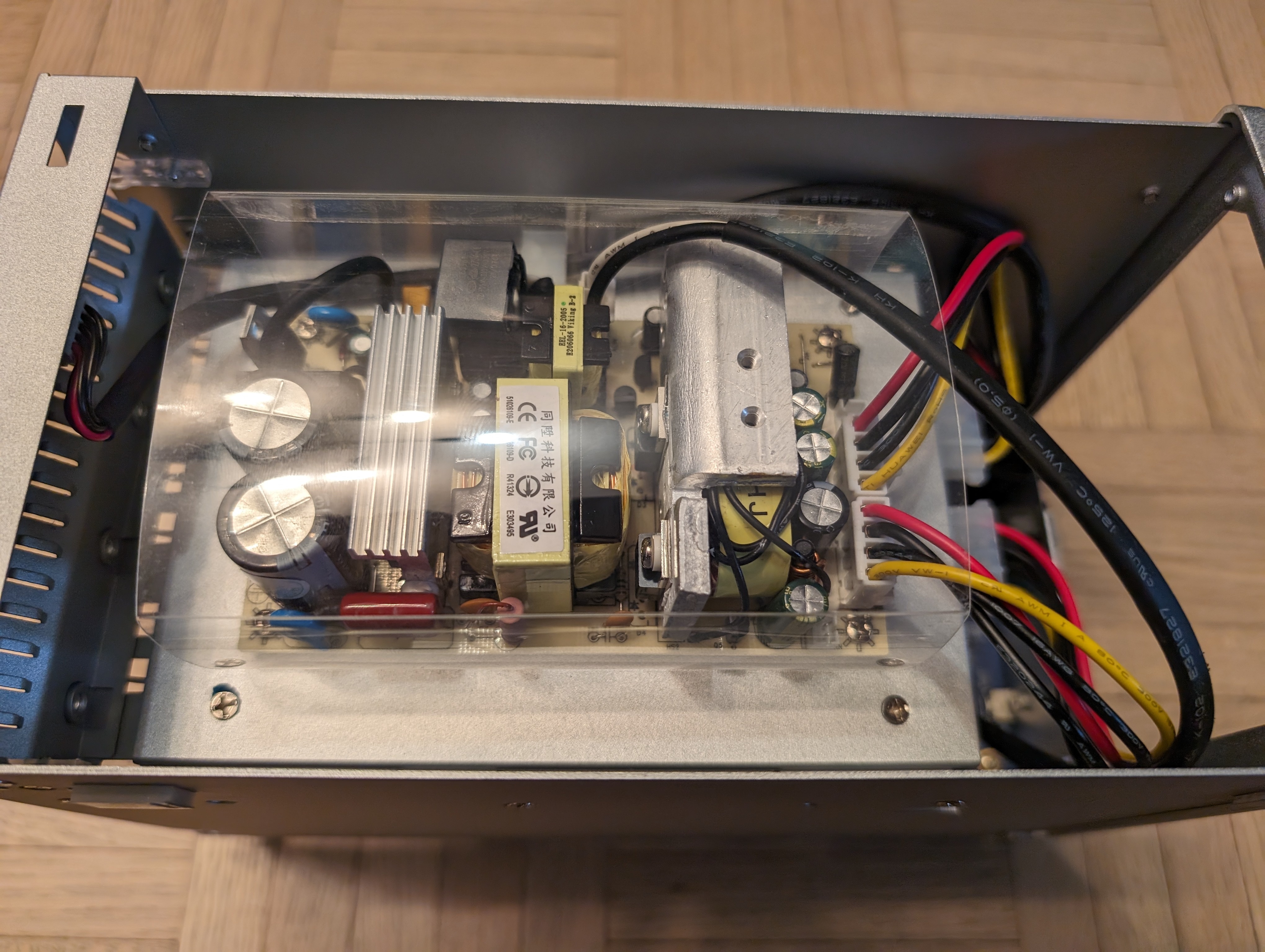

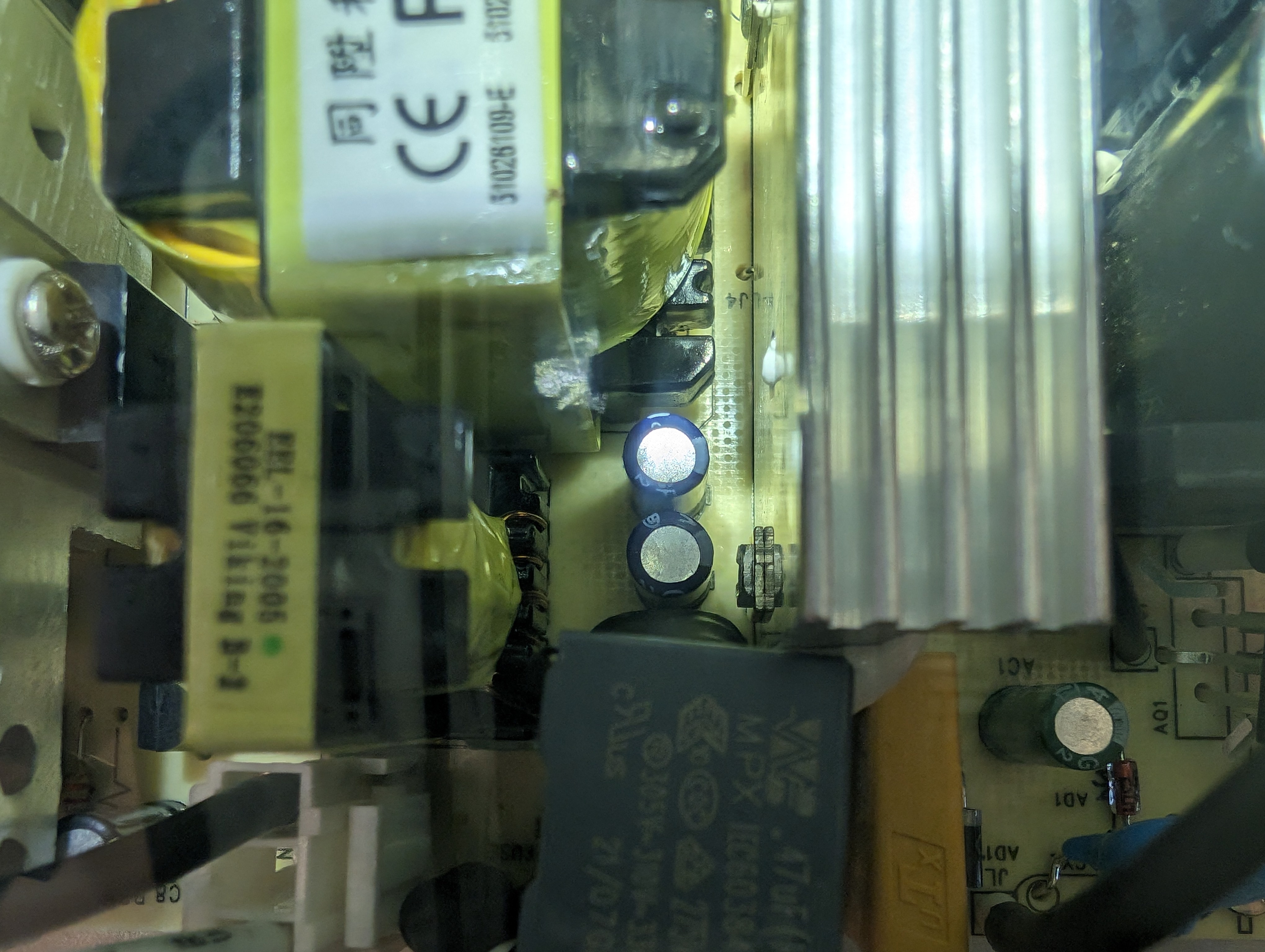

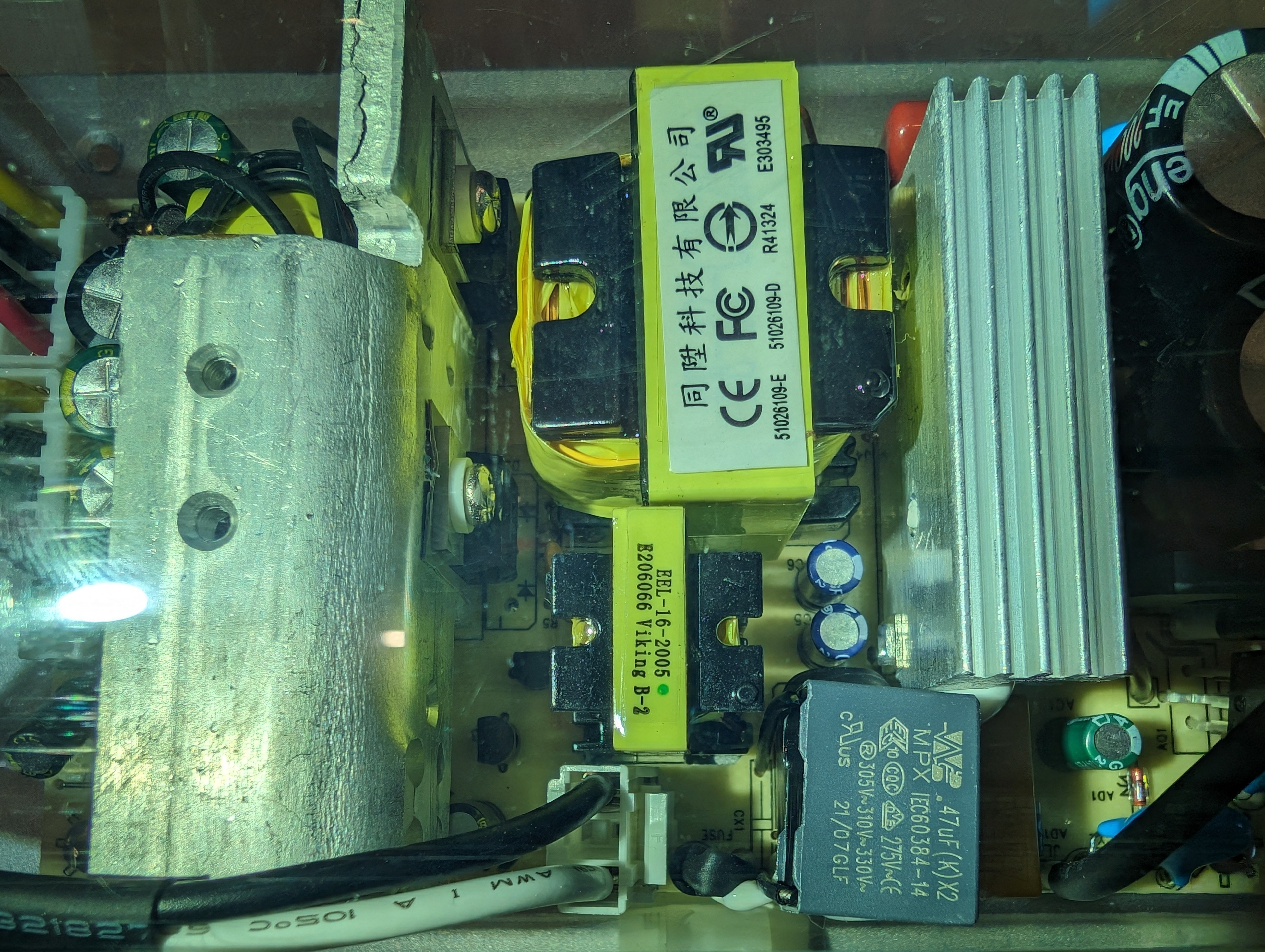

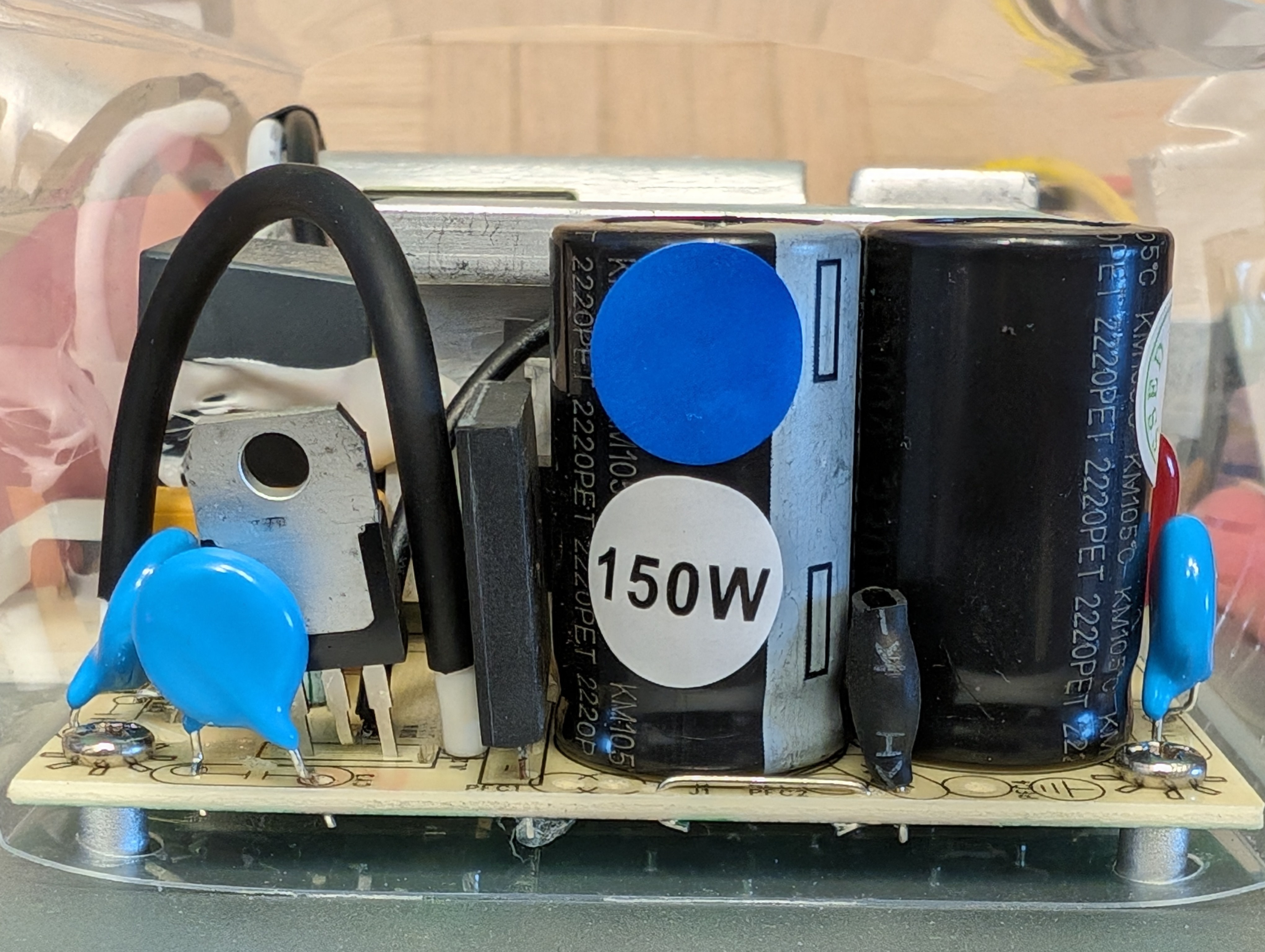

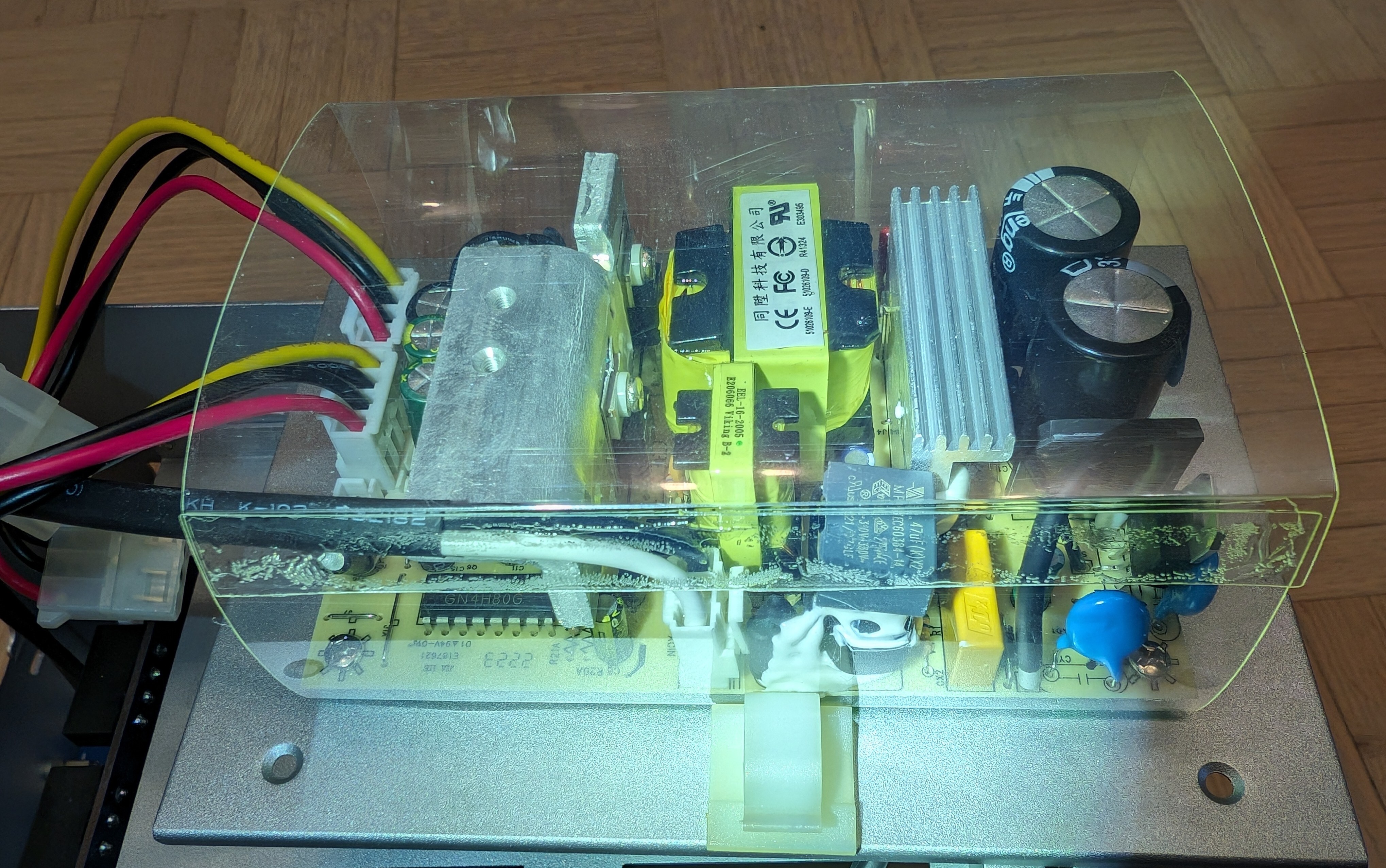

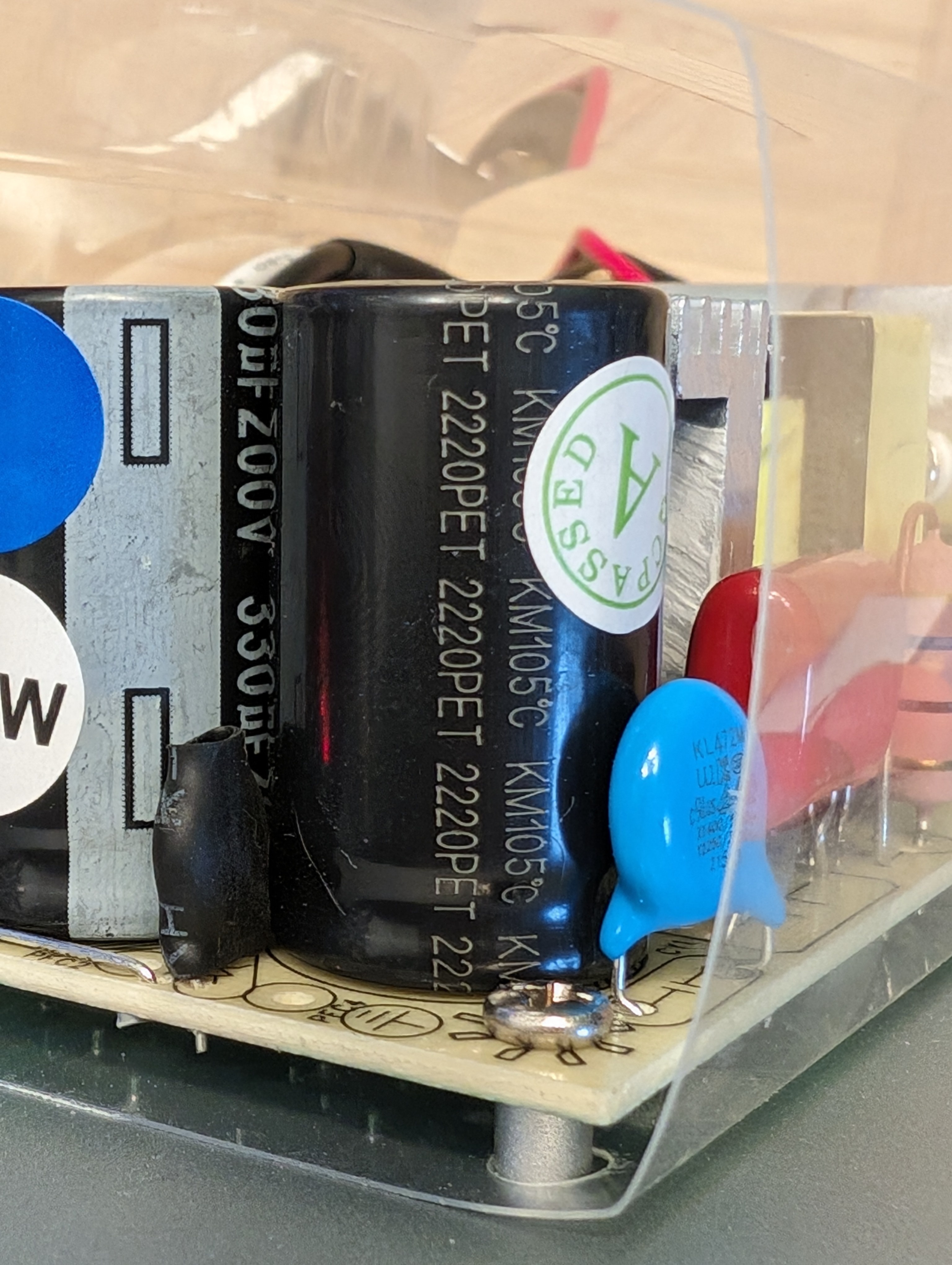

- The PSU uses electrolytic caps, which is unsurprising

- The main PCB is connected to the PSU via standard molex connectors like the ones found in ATX PSUs. Therefore if the built-in PSU dies, it could be replaced with an ATX PSU

- It appears to rename the drives to its own “Elite Pro Quad A/B/C/D” naming; however, `hdparm -I /dev/sda` still returns the original drive information. The disks appear with their internal designations in GNOME Disks, and the kernel maps them in `/dev/disk/by-id/*` according to those, as before. I moved my drives into it, rebooted, and ZFS imported the pool as if nothing had happened

- SMART info is visible in GNOME Disks as well as via `smartctl -x /dev/sda`

- It comes with both a USB-C to USB-C cable and a USB-C to USB-A cable

- Made in Taiwan
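
ZFS finds pool members by the labels written on the disks, and the stable names under `/dev/disk/by-id/` make it easy to confirm that the drives came back with the same identities after a move like this. A small sketch, assuming udev creates `usb-`-prefixed links for these drives (pass `prefix=""` to see every link); `by_id_map` is a hypothetical helper name:

```python
import os
import re
from pathlib import Path

PART_SUFFIX = re.compile(r"-part\d+$")

def by_id_map(by_id_dir="/dev/disk/by-id", prefix="usb-"):
    """Map stable by-id link names to the kernel disk node they resolve to.

    Partition links (names ending in -partN) are skipped so each disk
    appears once.
    """
    mapping = {}
    for link in sorted(Path(by_id_dir).iterdir()):
        if not link.name.startswith(prefix) or PART_SUFFIX.search(link.name):
            continue
        # Follow the symlink and keep only the node name, e.g. "sda".
        mapping[link.name] = os.path.basename(os.path.realpath(link))
    return mapping
```

Comparing `by_id_map()` before and after moving the drives shows whether each by-id name still points at a disk, even if the `sdX` letters shuffle.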

Testing

- No errors in the system logs so far

- I’m able to pull 350-370MB/s sequential from my 4-disk RAIDz1

- Loading the 4 disks together with `hdparm` results in about 400MB/s total bandwidth

- It’s hooked up via USB 3.1 Gen 1 on a B350 motherboard. I don’t see a significant difference in the observed speeds whether it’s on the chipset-provided USB host or the CPU-provided one

- Completed a manual scrub of a 24TB RAIDz1 while the pool was also loaded with an Immich backup, Plex usage, Syncthing rescans and some other services. No errors in the system log. Drives stayed under 44°C. Stability looks promising

- Will pull a drive and add a new one to resilver once the latest changes get to the off-site backup

- Pulled a drive from the pool and replaced it with a spare while the pool was live. SATA hot plugging seems to work. Resilvered 5.25TB in about 32 hours while the pool was in use. Found the following vomit in the logs repeating every few minutes:

Apr 01 00:31:08 host kernel: scsi host11: uas_eh_device_reset_handler start

Apr 01 00:31:08 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd

Apr 01 00:31:08 host kernel: scsi host11: uas_eh_device_reset_handler success

Apr 01 00:32:42 host kernel: scsi host11: uas_eh_device_reset_handler start

Apr 01 00:32:42 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd

Apr 01 00:32:42 host kernel: scsi host11: uas_eh_device_reset_handler success

Apr 01 00:33:54 host kernel: scsi host11: uas_eh_device_reset_handler start

Apr 01 00:33:54 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd

Apr 01 00:33:54 host kernel: scsi host11: uas_eh_device_reset_handler success

Apr 01 00:35:07 host kernel: scsi host11: uas_eh_device_reset_handler start

Apr 01 00:35:07 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd

Apr 01 00:35:07 host kernel: scsi host11: uas_eh_device_reset_handler success

Apr 01 00:36:38 host kernel: scsi host11: uas_eh_device_reset_handler start

Apr 01 00:36:38 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd

Apr 01 00:36:38 host kernel: scsi host11: uas_eh_device_reset_handler success

It appears to be related only to the drive being resilvered. I did not observe any resilver errors
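
To check whether the resets really are confined to one device, and how regular they are, the kernel log (for example the output of `journalctl -k`) can be parsed. A minimal sketch, assuming the lines look exactly like the excerpt above; syslog-style timestamps carry no year, so a placeholder year is prepended before parsing. `reset_intervals` is a hypothetical helper:

```python
import re
from collections import defaultdict
from datetime import datetime

# Matches lines like:
# "Apr 01 00:31:08 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd"
RESET_RE = re.compile(
    r"^(?P<ts>[A-Z][a-z]{2} \d{2} \d{2}:\d{2}:\d{2}) \S+ kernel: "
    r"usb (?P<port>[\d.-]+): reset SuperSpeed"
)

def reset_intervals(lines):
    """Return {usb_port: [seconds between consecutive resets on that port]}."""
    stamps = defaultdict(list)
    for line in lines:
        m = RESET_RE.match(line.strip())
        if m:
            # Placeholder year keeps strptime unambiguous; it cancels out
            # in the differences computed below.
            stamps[m["port"]].append(
                datetime.strptime("2000 " + m["ts"], "%Y %b %d %H:%M:%S")
            )
    return {
        port: [int((b - a).total_seconds()) for a, b in zip(ts, ts[1:])]
        for port, ts in stamps.items()
    }
```

Fed the excerpt above, this reports a single port, `6-3.4`, with gaps of 94, 72, 73 and 91 seconds between resets.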

- While resilvering, `iostat` shows numbers in line with the 500MB/s of the USB 3.1 Gen 1 port it’s connected to:

| tps | kB_read/s | kB_wrtn/s | kB_dscd/s | kB_read | kB_wrtn | kB_dscd | Device |
|---|---|---|---|---|---|---|---|
| 314.60 | 119.9M | 95.2k | 0.0k | 599.4M | 476.0k | 0.0k | sda |
| 264.00 | 119.2M | 92.0k | 0.0k | 595.9M | 460.0k | 0.0k | sdb |
| 411.00 | 119.9M | 96.0k | 0.0k | 599.7M | 480.0k | 0.0k | sdc |
| 459.40 | 0.0k | 120.0M | 0.0k | 0.0k | 600.0M | 0.0k | sdd |
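
Summing the per-device read and write rates gives the aggregate the USB link is carrying. A small sketch, assuming the human-readable `iostat` suffixes shown above and treating `k` as 1/1024 of `M` (that unit base is an assumption; results stay in iostat’s own `M`). `to_m` and `aggregate_rate` are hypothetical names:

```python
# Multipliers relative to iostat's "M" suffix; the 1024 step is an assumption.
UNITS = {"k": 1 / 1024, "M": 1.0, "G": 1024.0}

def to_m(value):
    """Convert a suffixed value such as '119.9M' or '95.2k' to M units."""
    return float(value[:-1]) * UNITS[value[-1]]

def aggregate_rate(rows):
    """Sum kB_read/s + kB_wrtn/s over all devices, in M per second.

    Each row is a whitespace-split line with the columns:
    tps kB_read/s kB_wrtn/s kB_dscd/s kB_read kB_wrtn kB_dscd Device
    """
    return sum(to_m(cols[1]) + to_m(cols[2]) for cols in rows)

first_resilver = [
    "314.60 119.9M 95.2k 0.0k 599.4M 476.0k 0.0k sda".split(),
    "264.00 119.2M 92.0k 0.0k 595.9M 460.0k 0.0k sdb".split(),
    "411.00 119.9M 96.0k 0.0k 599.7M 480.0k 0.0k sdc".split(),
    "459.40 0.0k 120.0M 0.0k 0.0k 600.0M 0.0k sdd".split(),
]
print(f"{aggregate_rate(first_resilver):.1f}M/s")  # 479.3M/s
```

For this table that is roughly 479M/s across the four disks, with each disk getting about a quarter of the port.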

- Running a second resilver on a chipset-provided USB 3.1 port while watching the logs for USB resets like the ones seen previously. The hypothesis is that there’s instability with the CPU-provided USB 3.1 ports, as there have been documented problems with those

- The new drive disconnected when I switched the KVM, which is connected to the same chipset-provided USB controller. Moved the KVM to the CPU-provided controller. This is getting fun

- Got the same resets as the drive began the sequential write phase:

Apr 02 16:13:47 host kernel: scsi host11: uas_eh_device_reset_handler start

Apr 02 16:13:47 host kernel: usb 6-2.4: reset SuperSpeed USB device number 9 using xhci_hcd

Apr 02 16:13:47 host kernel: scsi host11: uas_eh_device_reset_handler success

- 🤦 It appears that I read the manual wrong: all the 3.1 Gen 1 ports on the back I/O are CPU-provided. Moving to a chipset-provided port for real and retesting… The resilver has entered its sequential write phase and there have been no resets so far. The peak speeds are a tad higher too:

| tps | kB_read/s | kB_wrtn/s | kB_dscd/s | kB_read | kB_wrtn | kB_dscd | Device |
|---|---|---|---|---|---|---|---|
| 281.80 | 130.7M | 63.2k | 0.0k | 653.6M | 316.0k | 0.0k | sda |
| 273.00 | 130.1M | 56.8k | 0.0k | 650.7M | 284.0k | 0.0k | sdb |
| 353.60 | 130.8M | 63.2k | 0.0k | 654.0M | 316.0k | 0.0k | sdc |
| 546.00 | 0.0k | 133.2M | 0.0k | 0.0k | 665.8M | 0.0k | sdd |
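
Which controller a port belongs to can be double-checked from sysfs instead of the manual: a USB device’s sysfs entry resolves to a path that runs through the PCI address of the xHCI controller it hangs off, and `lspci -s <address>` then names that controller (`lsusb -t` shows the bus tree as well). A sketch under that assumption; `host_controller` and `controller_from_path` are hypothetical names, and the path in the docstring is illustrative, not taken from this machine:

```python
import os
import re

# A PCI function address such as "0000:0b:00.3" (domain:bus:device.function).
PCI_ADDR = re.compile(r"^[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$")

def controller_from_path(path):
    """Return the last PCI address in a resolved sysfs path, or None.

    e.g. /sys/devices/pci0000:00/0000:00:08.1/0000:0b:00.3/usb6/6-2/6-2.4
    gives "0000:0b:00.3", the controller that owns root hub usb6.
    """
    pci = [part for part in path.split("/") if PCI_ADDR.match(part)]
    return pci[-1] if pci else None

def host_controller(usb_dev, sysfs_root="/sys/bus/usb/devices"):
    """Resolve a USB device name such as "6-2.4" to its controller's PCI address."""
    return controller_from_path(os.path.realpath(os.path.join(sysfs_root, usb_dev)))
```

Running `host_controller()` on the device names from the reset messages (`6-3.4`, `6-2.4`) and passing the result to `lspci -s` would show whether both ran through the same controller.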

- Resilver finished. No resets or errors in the system logs

- Did a second resilver. Finished without errors again

- A resilver while connected to the chipset-provided USB port takes around 18 hours for the same disk that took over 30 hours via the CPU-provided port
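
Turning the two resilver times into average throughput makes the gap concrete. This assumes both resilvers moved the same 5.25TB and treats TB as decimal (10^12 bytes); if the figure came from tooling that reports binary units, both rates are roughly 10% higher, but the ratio is unchanged. `resilver_rate_mb_s` is a hypothetical helper:

```python
def resilver_rate_mb_s(data_tb, hours):
    """Average resilver throughput in MB/s, with 1 TB = 1,000,000 MB."""
    return data_tb * 1_000_000 / (hours * 3600)

cpu_port = resilver_rate_mb_s(5.25, 32)      # CPU-provided USB port
chipset_port = resilver_rate_mb_s(5.25, 18)  # chipset-provided USB port
print(f"CPU port: {cpu_port:.1f} MB/s, chipset port: {chipset_port:.1f} MB/s, "
      f"{chipset_port / cpu_port:.2f}x faster")
# CPU port: 45.6 MB/s, chipset port: 81.0 MB/s, 1.78x faster
```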

Verdict so far

The OWC has passed all of the testing so far. Even though the resilver finished successfully, there were silent USB resets in the logs while the OWC was connected to CPU-provided ports, and multiple ports exhibited the same behavior. When connected to a B350 chipset-provided port, on the other hand, the OWC finished two resilvers with no resets, and faster: 18 hours vs 32 hours. My hypothesis is that these silent resets are related to the known USB problems with Ryzen CPUs. Connected to a chipset port, the OWC itself passed testing with flying colors.

I’m buying another one for the new disks.

Pics

General

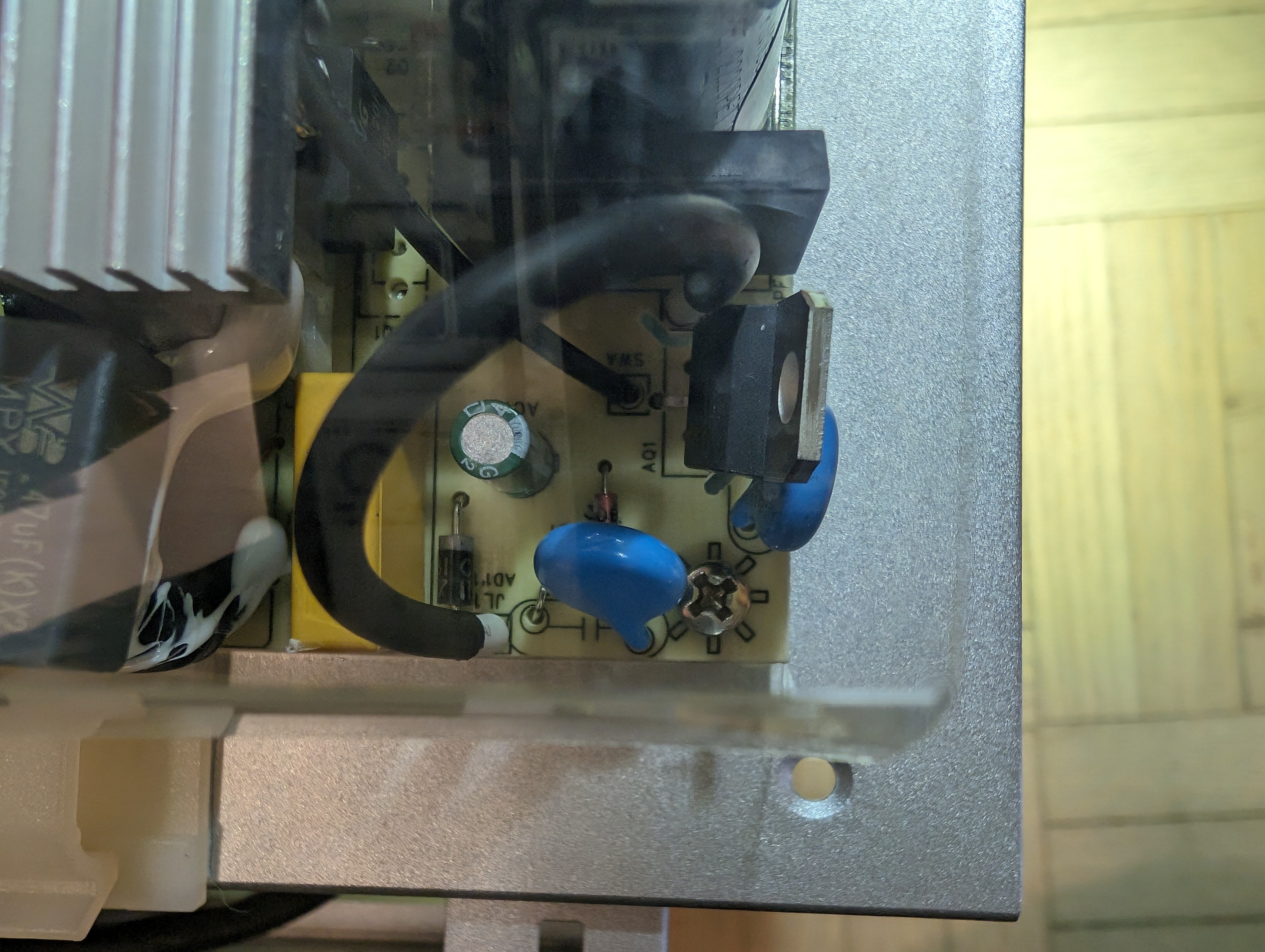

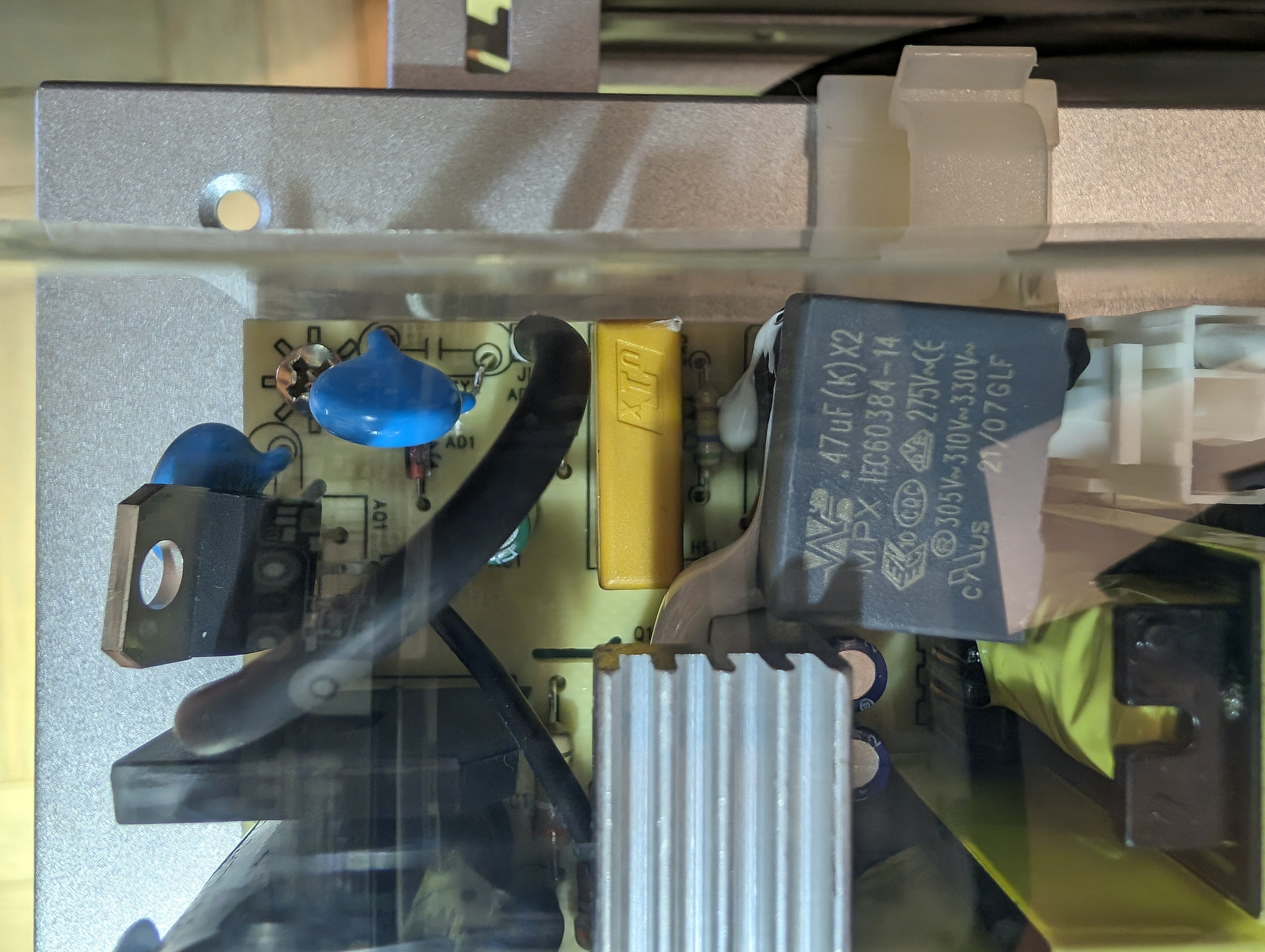

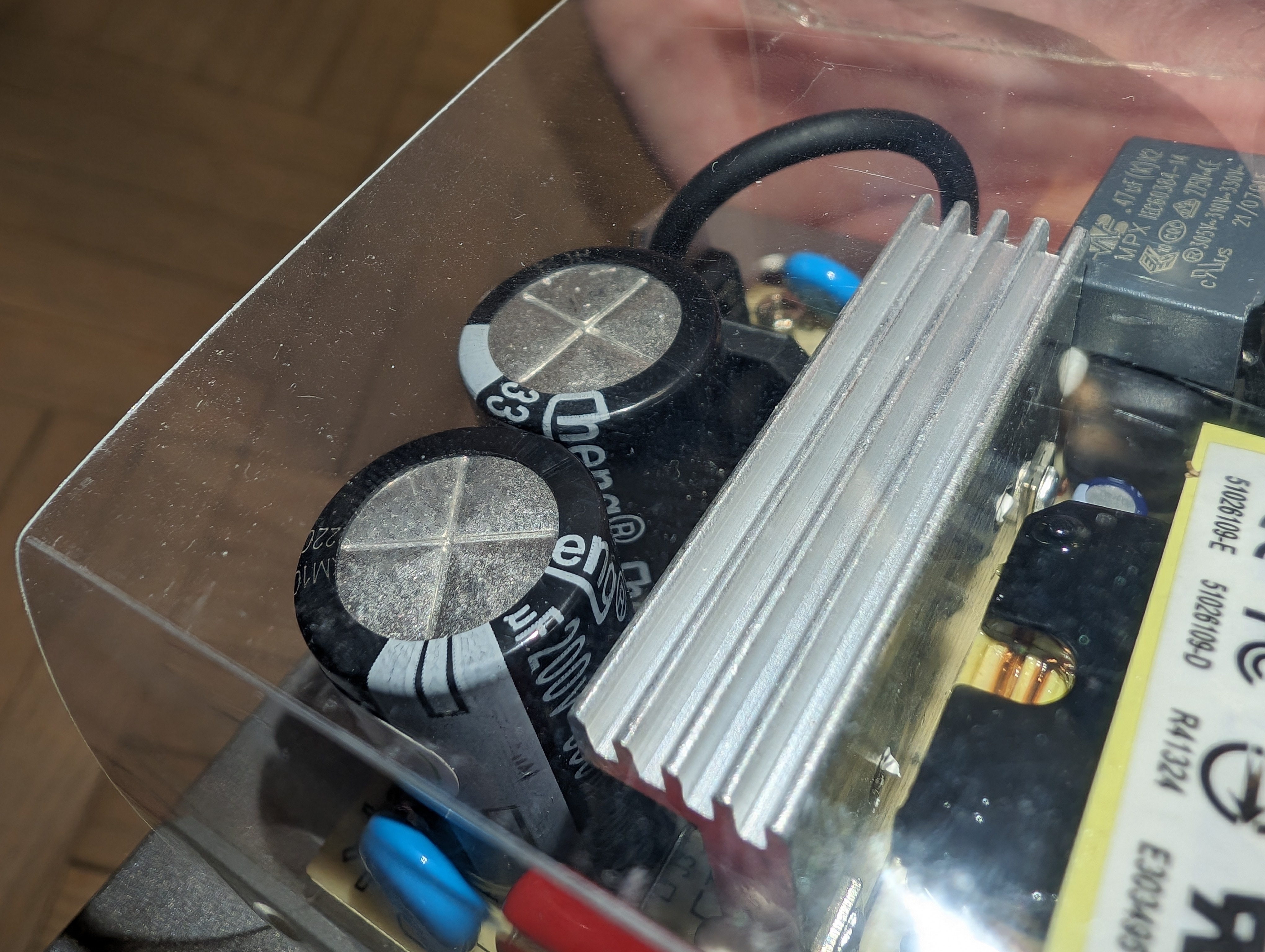

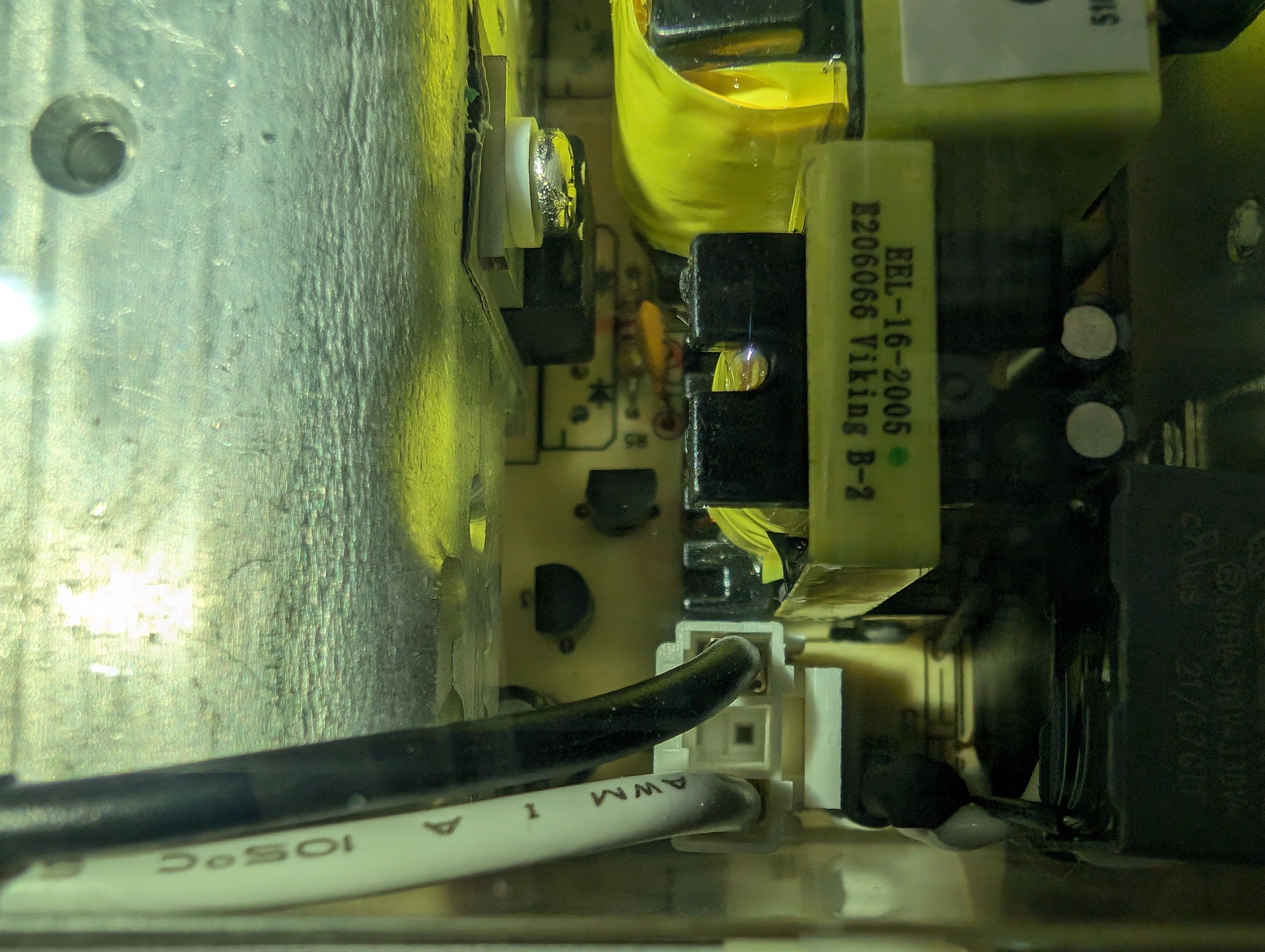

PSU

I was wondering what would be better for discoverability: writing this up in a blog post or on GitHub and linking it here, or just writing it here. Turns out Google is crawling Lemmy quite actively. This shows up within the first 10-15 results for “USB DAS ZFS”:

It appears that Lemmy is already a good place for writing stuff like this. ☺️

And now it no longer shows up.

What CPU are you using? And how much RAM are you using? It looks cool and solid though.

5950X, 64GB

It’s a multipurpose machine: desktop workstation, gaming and running various servers.

Thanks for the analysis and taking time to share!

Great analysis. Can you share photos of the power supply PCB? That’s usually a weak point on these kinds of units, and also the reason why disks often fail: cheap power supplies don’t properly filter power spikes and your hard drives burn.

I wasn’t able to reach it for a top-down visual through the back and so I don’t have pics of it other than the side view already attached. I’ll try disassembling it further sometime today, once the ZFS scrub completes.

Luckily, the PSU is connected to the main board via standard Molex. If the built-in one blows up, you could replace it with any ATX PSU, large or small (FlexATX, etc.), or one of those power bricks that output 5V/12V over a Molex connector, whether or not you can stuff it inside.

Thanks for the feedback! It does seem to be very deep in there.

Finally happy with the testing. I’ll disassemble it sometime today.

Go go go!

Done. It says 150W on it. Not sure if that’s real. If it is, then it’s rated well above what the hardware needs, which should bode well for its longevity, especially given that the caps are Chengx across the board, so definitely not the best. :D Can you tell anything interesting about it from the pics?

Seems reasonable. Unlike ultra-cheap Chinese power supplies, they added the bare minimum of isolation, so it should be able to handle a few spikes here and there. There are a bunch of components you can’t get datasheets for, but all things considered, I’ve seen and had worse.

Yeah, I googled that IC, no hits. 😂

I see a number of dual-output PSUs on Mouser that will probably fit well if this goes. For example.

That is very expensive. Why not just get a case that’ll fit 4 drives and an HBA in IT mode for a quarter of the price?

Please elaborate.

As I understand it, you’re talking about a PCIe SATA host controller and a box for the disks. Before landing on the OWC, I looked at QNAP’s 4-bay solution that does this, the TL-D400S. That can be found around the $300 mark. The OWC is $220 from the source, which is roughly equivalent to 4 of StarTech’s enclosures that use the same chipset.

Exactly. You’re connecting this to a PC of some sort, right? So why not just put the disks in the case? The OWC on UK Amazon is £440, which isn’t far off what I spent on my server build.

440 pounds is insane, agreed. 😂

Yeah, I get it then. So it depends on whether one has PCIe slots available, 3.5" bays in the case, whether they can change the case if it’s full, etc. It could totally make sense under certain conditions. In my case, there’s no space in my PC case and I don’t have any PCIe slots left. In addition, I have an off-site machine that’s a USFF PC with no PCIe slots or SATA ports. Its only available connectivity is USB. So in my case, USB is what I can work with. As long as it isn’t exorbitantly expensive, a USB solution has flexibility in this regard. I would never have paid 440 pounds for this if that were the price. I’d have stayed with single enclosures nailed to a wooden board plus a USB hub. 🥹 Which is how they used to be:

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| NAS | Network-Attached Storage |
| PCIe | Peripheral Component Interconnect Express |
| PSU | Power Supply Unit |
| SATA | Serial AT Attachment interface for mass storage |
| ZFS | Solaris/Linux filesystem focusing on data integrity |

5 acronyms in this thread; the most compressed thread commented on today has 10 acronyms.

[Thread #639 for this sub, first seen 29th Mar 2024, 21:35]